OPINION — Last December, the Office of the Director of National Intelligence (ODNI) declassified an Intelligence Community Assessment and a memorandum on how foreign adversaries attempted to influence the 2022 U.S. midterm elections. The reports outlined how the People’s Republic of China (PRC), Russia, Iran, and other authoritarian states pursued various covert influence efforts targeting U.S. voters.

This urgent warning about the very real risk of foreign malign influence comes as we have begun what is being called the biggest election year in history. More than 70 elections will take place around the world (including in 6 of the 10 most populous countries), with over 4 billion people eligible to vote. As voters go to the polls, the proliferation of generative AI tools will make it easier for malicious actors to attempt to influence elections. The democratization of technologies like ChatGPT, LLaMA, DALL-E, and many open source models is double-edged: the benefits these tools provide come with the ability for potential adversaries to harness AI for their own malicious ends.

The advent of generative AI (GenAI) tools creates risk in several ways. While these models expand access to content and accelerate its production, they are also capable of creating believable fake content. One study from December 2023 found that participants correctly detected deepfakes around 60% of the time. Beyond deepfake images, GenAI tools can be used to diversify text-based disinformation and target users with more convincing fake content in adversarial information operations. Given these trends, a majority of U.S. adults (58%) think AI will increase the spread of misinformation in the 2024 elections. AI-generated or AI-altered content could flood newsfeeds and make it difficult for users to judge the authenticity of information about candidates, voting, and other components of the democratic process.

Countries such as China have already demonstrated intent to use such tools to disrupt elections. Beijing’s recent efforts to interfere in Taiwan’s election are a prime example. In early January, one Taiwanese group used a large language model to identify a series of online behaviors consistent with Chinese state media. The analysis included two notably large, and likely AI-enabled, Facebook groups (#61009 and #61019) focused on Taiwan’s elections: the first network comprised 439 troll accounts operating 4,840 stories, and the second comprised 170 troll accounts operating 4,804 stories. Suspected online influence is not the only way the PRC has attempted to interfere with Taiwan’s election. The Taiwanese government has accused Beijing of trying to sway Taiwanese officials, using economic levers to influence behavior, and employing grey-zone tactics to intimidate the island, in addition to meddling in the information domain.

The use of AI-enabled influence operations alongside other influence efforts in Taiwan is not an isolated case. In recent years, the Chinese Communist Party (CCP) has advanced the concept of cognitive warfare as a means to achieve victory by influencing the minds of opponents. Beyond directly targeting elections, efforts in line with cognitive warfare concepts include launching AI-enhanced campaigns such as Spamouflage Dragon against the United States, sharing synthetic content after the Maui wildfires, continuing United Front Work, and using foreign influencers to shape narratives about the PRC globally.

In addition to the PRC, Russia and Iran are also known actors in this space. Throughout 2023, there were various reports of attempted influence in elections globally. Recorded Future revealed how a Russian-connected Doppelgänger network published fake election information online, and Microsoft identified at least five cyber-enabled Iranian influence operations, some of which are connected to the Cotton Sandstorm network believed to have meddled in the 2020 U.S. elections. Taken together with the PRC efforts in Taiwan noted above and previously reported PRC attempts to influence elections in Canada and Australia, these examples of authoritarian influence efforts represent a very real threat to democratic interests: widespread, tech-enabled foreign malign influence with the potential to affect elections.

Taiwan’s recent experience illustrates how important it is to effectively organize against foreign malign influence. For the United States and other democracies, it’s a signal that AI-enabled foreign influence is coming this year but can also be countered through concerted efforts by governments and citizens alike. Taiwan has notably countered disinformation through public dissemination of information, the use of AI tools to identify and track threats, and public resilience efforts.

Within the United States, protecting the electorate from malign foreign influence falls to several different departments and agencies. While in theory they collectively should have the means to protect us, in reality they lack sufficient coordination and guidance, are under-resourced, and are not organized to take action when foreign information threats emerge. Among them, the Intelligence Community (IC) provides our ability to scan the horizon for foreign-based information threats. It should be able to detect AI-generated fake content from foreign malign actors and understand their capabilities and intentions. Cyberdefense agencies additionally handle detection and risk management, while law enforcement agencies handle investigation. However, all these efforts are ill-equipped to detect and prevent this activity, given the likely steep rise in the volume of malign content this year.

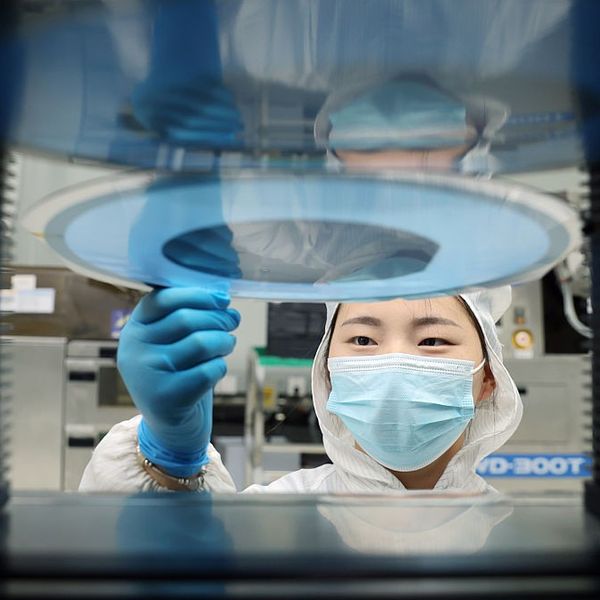

As it currently stands, the United States is not prepared to protect against anticipated levels of foreign malign interference. It will have to bolster its efforts to counter foreign malign influence across departments and agencies to be effective. Notably, this means tailoring IC activities to support national security interests writ large, given the IC’s central role in countering foreign threats. The IC can do this by unifying its collection and analysis entities under deliberate leadership through an action arm, such as a coordination entity. One option would be to build upon the 24/7 tech-enabled Joint Interagency Task Force previously recommended by the National Security Commission on Artificial Intelligence (NSCAI). A second, complementary option would be for the U.S. government to integrate AI tools at scale. One way AI models can support these efforts is by using bot detection to spot and flag anticipated campaigns for removal, as Taiwan AI Labs does in real time. IC elements could also deploy Natural Language Processing (NLP) techniques that sift through foreign-originating data to perform sentiment analysis, freeing analysts’ time for other tasks.

While some tools and techniques suit particular missions better than others, the United States and its allies and partners should focus on adopting foundational capabilities that help them carry out the mission of countering foreign malign influence faster across the board. To maintain democratic integrity against evolving AI-enabled threats, democracies must be prepared to embrace new tools.

The Cipher Brief is committed to publishing a range of perspectives on national security issues submitted by deeply experienced national security professionals.

Opinions expressed are those of the author and do not represent the views or opinions of The Cipher Brief.
