EXCLUSIVE SUBSCRIBER+MEMBER INTERVIEW — On the same day that a group of industry leaders warned of the “risk of extinction” that stems from unbridled artificial intelligence, Congressman Seth Moulton (D-MA) sat down for a wide-ranging interview with The Cipher Brief about its effects on war-fighting, autonomous weaponry, and why a Geneva Convention for AI is needed before it is too late.

“[This warning] is a meaningful warning because it's coming from the industry leaders who stand to profit off AI the most,” said Moulton. “If they're willing to curtail their own business to put guardrails around their product, all of us should pay attention.”

A draft House bill intended to accelerate the Pentagon’s use and governance of AI, meanwhile, is currently working its way through Congress.

Moulton, however, says he has concerns.

“In just the last week, I've spoken with two service chiefs and the author of that bill about its implications,” he said. “While it's certainly well intended, and the Pentagon is unquestionably way behind, I'm concerned that top-down Pentagon regulation could actually slow us down further. And so I've been privately encouraging service chiefs to just go out and get ahead of the Pentagon on this; be the first service to effectively innovate and adopt AI. While that less coordinated effort may not be ideal in terms of containing overall cost or duplicative work, I actually think that competitive innovation is what the Pentagon needs to catch up.”

The transcript below has been edited for length and clarity.

TIMELINE:

- 1956 - The Dartmouth Summer Research Project on Artificial Intelligence is convened, widely considered to be a founding event of artificial intelligence as a field.

- 1957 - Frank Rosenblatt builds an early artificial neural network that carries out pattern recognition based on a two-layer computer learning network. The New York Times called it “the embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence … a remarkable machine… capable of what amounts to thought.”

- 1959 - Arthur Samuel coins the term “machine learning” while programming a computer to play checkers well enough to beat a human.

- 1968 - The film 2001: A Space Odyssey is released, showcasing a supposedly sentient computer named HAL 9000.

- 1985 - Procter & Gamble makes use of the first business intelligence system to be used for sales information and retail.

- 1993 - Vernor Vinge, science fiction author and professor, publishes “The Coming Technological Singularity,” predicting that “within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.”

- 1997 - IBM’s Deep Blue becomes the first chess-playing program to defeat a world chess champion.

- 2000 - Kismet, a robot that recognizes and simulates emotions, is developed by Cynthia Breazeal, robotics scientist and entrepreneur.

- 2006 - South Korea unveils plans for sentry robots along the Demilitarized Zone, capable of fully autonomous tracking and targeting.

- 2009 - The Intelligent Information Laboratory at Northwestern University creates Stats Monkey, which autonomously writes sports news stories.

- 2011 - IBM’s Watson competes on Jeopardy! and wins against two former champions.

- 2012 - The Pentagon puts forth a directive designed to “minimize the probability and consequences of failures in autonomous and semi-autonomous weapon systems,” and requires that “appropriate levels of human judgment” be exercised over robots that employ deadly force.

- 2013 - The X-47B unmanned combat aircraft lands on the USS George H.W. Bush, becoming the first unmanned autonomous craft to touch down on an aircraft carrier.

- 2016 - Google DeepMind's AlphaGo beats Go champion Lee Sedol.

- 2020 - GPT-3, the third model in OpenAI's Generative Pre-trained Transformer series, is introduced.

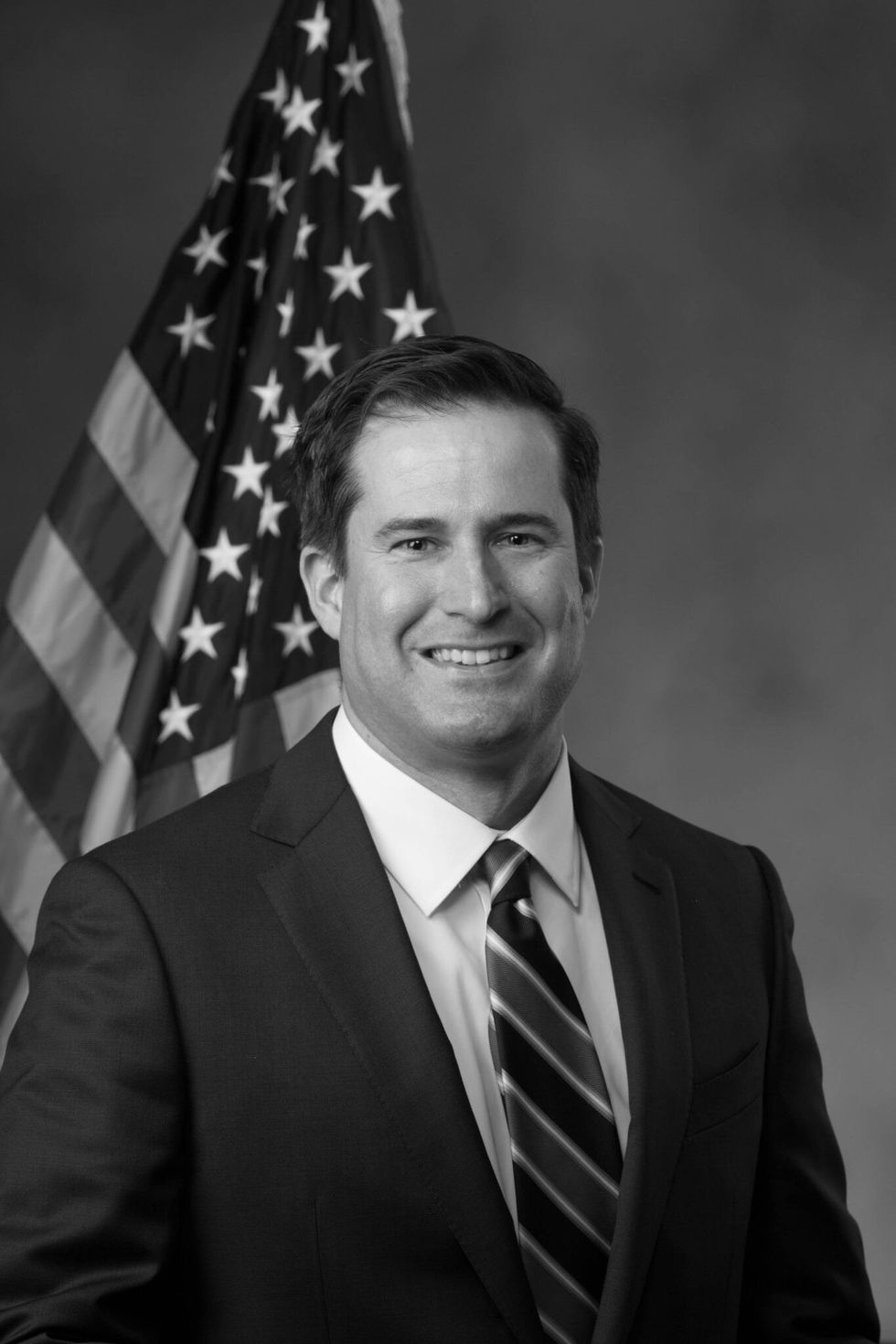

Seth Moulton, U.S. Congressman (D-MA)

Congressman Seth Moulton (D-MA) represents Massachusetts’ 6th Congressional District. A Marine Corps veteran who served four infantry combat tours in Iraq, he currently sits on the House Armed Services Committee, where he is Ranking Member on the Subcommittee on Strategic Forces, as well as on the newly formed House Select Committee on the Strategic Competition Between the U.S. and the Chinese Communist Party. Moulton earned degrees in physics, business, and public administration from Harvard University.

The Cipher Brief: Leading industry researchers and tech executives working in artificial intelligence have just warned of a possible existential threat to humanity accompanying this burgeoning tech. What do you make of that? And what can we do about it?

Moulton: First of all, it's a meaningful warning because it's coming from the industry leaders who stand to profit off AI the most. If they're willing to curtail their own business to put guardrails around their product, all of us should pay attention. Second, I wonder where they were three years ago, or even five years ago, when a few of us were trying to get more attention to this. After all, they're the ones developing this technology. They should have been thinking more clearly and forthrightly about its implications.

The Cipher Brief: What does this then suggest should be done, or can be done? The “can be done” is perhaps the bigger question.

Moulton: It's an important point because I've already seen some AI experts throw up their hands and say, the government is so behind with all of this stuff. We haven't even passed a data protection law like Europe did years ago. How are we going to regulate AI? I think that's a legitimate concern, but it is absolutely not an excuse for failing to try. I think the strategy has to be to focus on the most dangerous cases, rather than try to regulate the industry as a whole and try to stay ahead of every innovation. Because I agree, that sounds hopeless.

The Cipher Brief: Given there are currently only a handful of players focused on the most advanced versions of AI, which lead to existential questions about threats to humanity, is the emphasis oriented toward a more targeted type of regulation?

Moulton: I think the big concern is that our adversaries are focused on some of these things. Just viewing this again as an American-centric problem, because we are currently leading, is completely missing the forest for the trees. The only way to address the existential threats to humanity is for all of us to come together. While that's hard, there is significant precedent for doing so. The way the world united to limit the proliferation and use of nuclear weapons, shortly after that technology was discovered by the United States, is a prime example. Much of that effort was led by the scientists themselves, but it culminated in international agreements that have largely stood the test of time. And they've been successful in preventing the use of nuclear weapons ever since. There are more recent examples, such as prohibiting the use of blinding laser weapons, which are relatively easy to produce with today's technology. But armies across the globe, friend and foe alike, don't use them. Even with the tensions between the United States and Russia and China, we should pursue this kind of agreement aggressively. And there's good reason to hope we can have some success.

The Cipher Brief: Do the politics concern you? These are certainly different times than when the Geneva Convention was forged, both internationally and domestically, in terms of the level of political polarization and the capacity to get bipartisan legislation accomplished.

Moulton: Yes. Politics are always concerning, both domestically and internationally, and I worry that we may have to suffer a terrible disaster before people appreciate the seriousness of the warnings that I and others have issued. But again, that doesn't mean we shouldn't try. I think one of the best things I can do for my two- and four-year-old daughters right now is work as hard as I can at limiting the dangers of artificial intelligence. Because they're going to live in a world where AI is everywhere, and we don't want AI, or the worst people on Earth using AI, to have the upper hand.

The Cipher Brief: In that vein, you've talked about the importance of early adopters and precedent setters, as well as these ethical and moral guardrails for how AI is used. What does that look like to you in practice, and where are your principal concerns, both in terms of the technology, but also in terms of the adversary?

Moulton: These are excellent questions and I don't pretend to know all the answers myself. First of all, this is exactly where we need to have a vigorous debate with experts around the globe. But my quick take is that there are two principal concerns. The first is having AI that makes life or death decisions on its own: AI that kills without human, and therefore moral, decision making. The second is having AI that's programmed to disregard long-held conventions on how we fight wars, conventions like the Geneva Conventions, [which work toward] limiting civilian casualties and collateral damage. There are probably more principles out there, but those are the first two that seem essential to me.

The Cipher Brief: You are pressing the Pentagon, as a part of your role on the House Armed Services Committee, to move faster on this. I know there's a draft AI bill that's working its way through the House. What is it going to take to get that passed? What will it do? I’m wondering more about the internal mechanics of that process.

Moulton: In just the last week, I've spoken with two service chiefs and the author of that bill about its implications. While it's certainly well intended, and the Pentagon is unquestionably way behind, I'm concerned that top-down Pentagon regulation could actually slow us down further. And so I've been privately encouraging service chiefs to just go out and get ahead of the Pentagon on this; be the first service to effectively innovate and adopt AI. While that less coordinated effort may not be ideal in terms of containing overall cost or duplicative work, I actually think that competitive innovation is what the Pentagon needs to catch up.

The Cipher Brief: How would that work in practice?

Moulton: The Marine Corps set a good example with Force Design 2030, where the Commandant and his team laid out a strategy to address the threat of China in the Pacific, frankly, in the absence of guidance from the secretary or others at DOD. Now, the other services are scrambling to catch up and the Pentagon has recognized that the Marine Corps is leading us in the right direction. But it took that initiative from the Marine Corps to get it done. It never came at the direction of higher-ups at DOD.

The Cipher Brief: There is some competition as well, not only in China, but also in Europe, where a draft EU AI act is inching closer to becoming law. I wonder how that plays into the calculus of precedent setters that you've talked about?

Moulton: I give a lot of credit to the EU for leading on these tech issues. I'm not saying they're doing everything right, but they're clearly making more progress than we are. At the Munich Security Conference I was pressed by some EU lawmakers on why the United States is so behind on these issues and just wants to let big tech get away with everything. I pointed out that we're definitely behind and a lot of my colleagues in Congress are to blame. But the American people seem to agree with the EU that we want some guardrails around things like data privacy and big tech, and I think this is another case where the American people probably want some guardrails. But our political leadership is behind. I'm struck by how many people have come up to me and commented on my AI op-ed. And not what I would've guessed were the likely suspects, not necessarily younger, techy people. I had an almost 90-year-old veteran at the Memorial Day Parade in my home come up to me yesterday and tell me how important my op-ed was. I think the American people are quickly waking up to the dangers. The question is whether leaders in Congress and the State Department and DOD and elsewhere will have the courage to act quickly.

The Cipher Brief: Back in 2020, you co-chaired the Future of Defense Task Force, which published a report recommending that the Pentagon get rid of certain legacy weapons systems and better embrace emerging technologies. That was before Ukraine. I'm wondering how the math has changed since then, or has it?

Moulton: Ukraine has completely validated our conclusion in the Future of Defense Task Force, for two primary reasons. First, the reason the Ukrainians are actually winning this war against all odds is because they're embracing these innovations and Russia is still fighting like it's 1985. Second, to the degree that both sides are not embracing the innovations and are still in trench lines, fighting artillery battles in parts of the country, the sheer cost of that World War I-style attrition warfare is further proof that's not the kind of war we want to be fighting.
