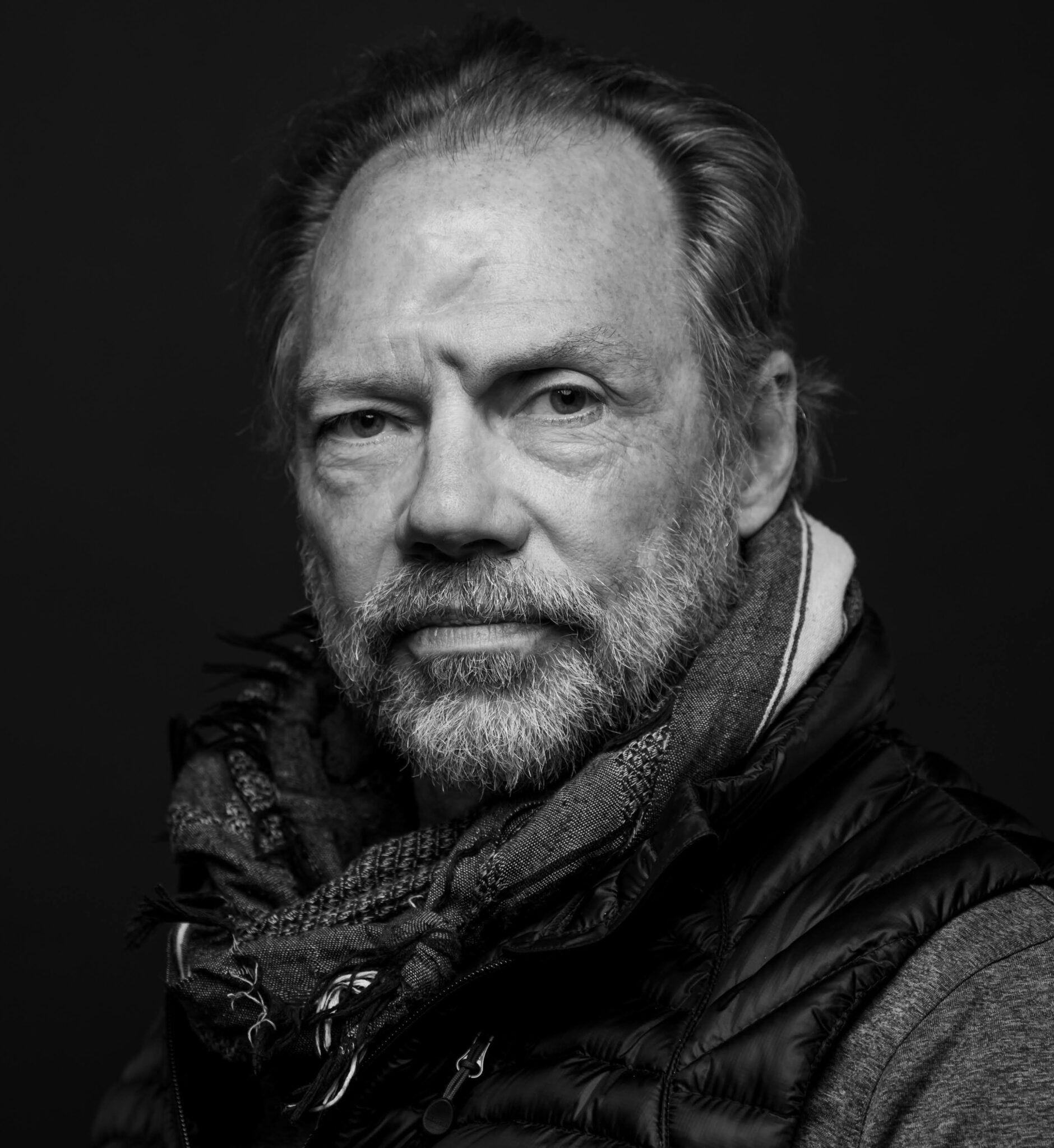

Cipher Brief Expert Thomas Donahue retired from CIA after 32 years of service. He served as the Chief Editor of the President’s Daily Brief and other CIA daily production during the second term of the Clinton administration, and spent the last 18 years of his career focused on cyber threats as a manager and senior analyst in what is now known as the Center for Cyber Intelligence.

Donahue served four years at the White House during the Bush and Obama administrations, most recently as the senior director for cyber operations for the National Security Council staff. During his last two years, he was the research director at the DNI’s Cyber Threat Intelligence Integration Center. He recently presented at The Cipher Brief's public-private, engagement-focused 2019 Threat Conference. What follows is a written version of his presentation.

The long and winding road

That leads to your door

Will never disappear

I've seen that road before

It always leads me here

Lead me to your door

The wild and windy night

That the rain washed away

Has left a pool of tears

Crying for the day

Why leave me standing here

Let me know the way

— Lennon and McCartney, 1970

Author's Note: I am old, cranky, and tired. You should know this from my taste in music. So please forgive bluntness that may stray into some slight hyperbole or fantasy. I am trying to make a point …

Since at least the time of the 1996-97 President’s Commission on Critical Infrastructure Protection, we have been having the same conversation about the dangers the Internet poses to the foundational capabilities of our economy and national security. But ever since then, we have approached the problem from a tactical point of view that treats symptoms rather than root causes. We ignore basic security principles and underestimate the true impact of cyber threats on real-world processes. As a result, we fail to appreciate the risks, and we fail to allocate the resources needed to design inherently secure business processes and technology architectures. Some have observed that a failure in new 5G telecommunications technology could result in the failure of society, because the technology will be so ingrained in every critical aspect of sustaining our increasingly complex, interdependent infrastructures. If so, then shame on us if we allow this to go forward in this fashion. Let me know the way …

We have understood basic security principles for a very long time. One could surmise that King Hammurabi in ancient Babylon 3,750 years ago (when he wasn’t working on one of the earliest written codes of law) might have called for a “Royal Commission on Food Resiliency.” In that agricultural age, urbanization and a growing population created an urgent need to keep granaries safe, lest a crop failure leave the population to starve. If you think through what would have been needed, it becomes apparent that redundancy, diversity, separation of roles and duties, auditing, supply chain management, and concerns with insider threats and corruption (not to mention bugs, mold, and rats) would have led officials of the day to impose security principles that we would recognize as relevant to information and communications systems today.

During the Victorian era, US and British entrepreneurs created the modern tools and business processes of industrial administration, which spread across the world. In the 1950s and 1960s, we began to automate those processes, replacing tools (file cabinets, phones, typewriters, calculators, etc.) one for one with computer systems. With the Internet, we hooked multipurpose computers together, and the result is a complex, interconnected system involving billions of people and exabytes of information. But in hooking it all together and designing new businesses to take advantage of this cornucopia of connectedness, we chose to ignore the security principles learned in Babylon. No amount of patching and bolted-on cyber security technology will fix this. We need to go back and reexamine what we are doing with this technology, reevaluate the risks and the costs, and reconsider this promiscuous architecture. We must redesign the business processes and build in the risk calculus up front. Crying for the day …

So, what have we been doing since 1997? We have survived so far, so surely this is not a great concern? We have seen a significant cyber security industry emerge that is particularly good at detecting more bad things of the same type we have already seen. I don’t mean to be flippant. This is a tremendous advance that allows well-prepared firms to mitigate some of the most sophisticated threats more quickly, or even avoid them. And yet, data breaches continue to trend upward in scale. We struggle to understand the full costs of cyber events. More importantly, the worst cyber security events of each year are growing in impact, in part because we do not use the full range of technologies to secure networks and because we put ever more important things and processes at risk. The shutdowns of ports and manufacturing processes during the 2017 NotPetya attack are the most obvious examples. But the US Government is also warning us about Russian and Chinese efforts to emplace sabotage capabilities within our energy and power infrastructures. We have sophisticated actors disabling the safety systems of industrial facilities. What does it take to get our attention? Will we finally notice when the lights go out? Why leave me standing here?

As a whole, the dozens of US Government cyber organizations and thousands of cyber security companies are not keeping up in several critical areas. We lack comprehensive situational awareness (“order of battle” and “rates of fire”). We are unable to say with certainty that adversaries are not in our most sensitive infrastructures, such as the power grid. We do a poor job of documenting damage and understanding costs, limiting the utility of insurance. Each new major incident leads to a repetition of the usual confusion regarding who should play, who we can share with, how we share, and what we can share. We have no idea how we would handle an extended outage of critical infrastructure (with the recent experience of an 11-month power outage in Puerto Rico as a relatively small case in point). Every ransomware event—such as the attack in March against Norsk Hydro and two US chemical firms—demonstrates that we do not have plans and procedures in place for rapid restoration of critical data or processes. Restoration of data or systems could be just the “push of a button.” But we rarely make that investment. I have seen that road before …

Author’s Note: Also see New York City’s recent struggle with unexpected interdependencies during the GPS “calendar” rollover.

We have exerted tremendous efforts to create “partnerships” between government and the private sector, but these largely fall short. Too little information is shared too late. Full stop. We spend most of our time cajoling fellow victims to do better, and we typically lack close operational relationships, built on detailed preplanning, with the partners who could actually solve many problems on a bad day: the network operators. We talk to all sorts of companies, but it is not an operational partnership. Such operational partners could help with situational awareness, prevention, mitigation, and response in ways that go far beyond current practices. This approach has helped when we have tried it, as in the WannaCry response. And yes, DHS leaders understand this, but they have a long, hard road ahead of them. We need to support them.

The DHS National Risk Management Center has undertaken a new approach to identifying risks that appears to be a major step in the right direction. The Center is focusing on “critical functions” and then identifying the interdependent “processes” that support those functions. This can lead to the identification of relevant organizations, service dependencies, and suppliers whose networks might place the functions at risk. That, in turn, can lead to risk mitigation strategies and preparation for incident response and restoration. This should not be just for national planning. The same analytic approach should be applied to every enterprise in the government and every part of the private sector, rather than starting from a broad-based attempt to mitigate only technical risks in networks, regardless of their criticality. We cannot afford to protect everything to the maximum degree, so we had better figure out what cannot fail. It always leads me here …

We must design risk management and allocate resources with a view toward the potential impact on our business processes. In doing so, we may find something seemingly insignificant is vital to the overall mission and requires significant investment to ensure operational resilience. We need real partnerships that allow us to see what is coming and to have procedures in place that allow for rapid response and restoration. We need research and development efforts that are coherent and synchronized and whose success is measured in terms of real-world objectives for real networks. We need infrastructure road maps to move incrementally from what we know is inadequate to what should be resilient.

Stuff will fail. Someday, 5G will fail. But our society should not. Lead me to your door …
