In the aftermath of the Defense Advanced Research Projects Agency’s (DARPA) recent AlphaDogfight Trials that saw AI defeat a human F-16 pilot in a simulated dogfight, we spoke with Cipher Brief Expert General Philip M. Breedlove (Ret.) about the future of AI in the cockpit.

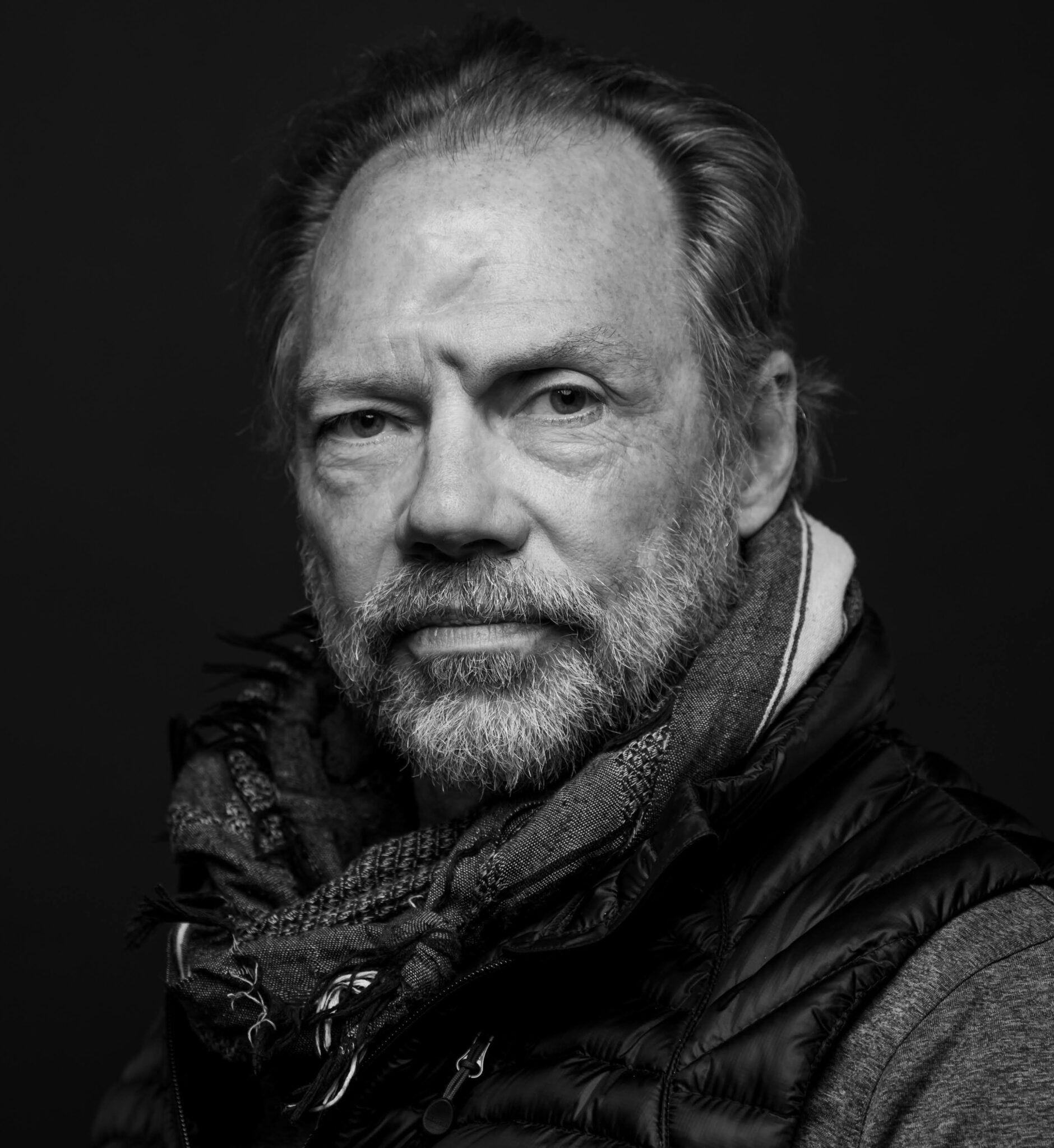

Gen. Breedlove retired as NATO Supreme Allied Commander and is a command pilot with 3,500 flying hours, primarily in the F-16. He flew combat missions in Operation Joint Forge/Joint Guardian.

The Cipher Brief: As you consider the historical arc of combat aircraft from the time of human-only piloted aircraft to the present where some automation has been introduced in the cockpit, how do you view the future of combat aircraft evolution over the next 3-5 years?

General Breedlove (Ret.): Technology is moving fast, but in some cases, policy might not move so fast. For instance, technology exists now to put sensors on these aircraft that are better than the human eye. They can see better. They can see better in bad conditions. And especially when you start to layer a blend of visual, radar, and infrared sensing together, it is my belief that we can actually achieve a more reliable discerning capability than the human eye.

I do not believe that our limitations are going to be on the ability of the machine to do what it needs to do. The real limitations are going to be on what we allow it to do in a policy format. If we send a remotely piloted aircraft (RPA) or an autonomous aircraft into the target area and tell it to, "Go hit the third floor of this building, northwest corner," we can probably build a remote aircraft that can do that and one that is as good and probably better and more reliable over time than a pilot. But when that RPA gets into the target area, will it be able to sense things around the target that would cause a human pilot to come off the target? These can be combatants, non-combatants or other things that were unanticipated. We can teach that RPA to go hit the third floor, northwest corner, second window from the left, and it can do it better than a human. But what it might not be able to do better than a human for some amount of time is to discern that we have other extenuating circumstances, and because of risks of collateral damage, we need to come off the target.

I have a lot of confidence in our ability to develop the capabilities we want in the machine. The real question is, will we allow it to go do this?

We can teach an RPA to understand what an S-400 site is, and using all of the really cool sensors that we have out there in the world now, we can probably teach that RPA to be able to tell between a real S-400 and a fake one or a decoy or whatever, and that aircraft may be able to go out there and do its job as good or better than a pilot in most instances. But are we ever going to get to the point where we actually turn it loose to go do that? Especially if the S-400 is snuggled up against the town, or a hospital, or a mosque? These are the kinds of things that our opponents love to do, because they know how we think and how we function.

The Cipher Brief: U.S. adversaries are also developing this kind of technology. How does that factor into the equation?

General Breedlove (Ret.): I actually believe we'll see our opponents use it against us possibly before we use it against them, because they're not going to be concerned about collateral damage involved and things like that.

The Cipher Brief: Do you think that using completely automated UAV fighter aircraft will be the way future engagements are fought?

General Breedlove (Ret.): Who knows about five decades from now? I think that there is going to be a point where we may get there. It’s hard to say. There's a lot of imprecise language around this subject, and you often hear people talk about "artificial intelligence," when what they're really talking about is human-assisted decision-making. Humans are training machines to do things, and machines are executing what they've been trained to do, as opposed to actually making independent, non-human-aided decisions. I do believe we're in a timeframe now where there may be a person in the loop in certain parts of the engagement, but we're probably not very far off from a point in time when the human says, "Yep, that's the target. Hit it." Or the human takes the aircraft to a point where only the bad element is in front of it, and the decision concerning collateral damage has already been made, and then the human turns it completely over. But as for the high-end extreme, the "launch an airplane and then see what happens next" kind of scenario, I think we're still a long way away from that. I think there are going to be humans in the engagement loop for a long time.

The Cipher Brief: Are you confident in the U.S. military’s ability to maintain overmatch and accurately assess China’s combat aircraft capabilities as the race for AI dominance heats up?

General Breedlove (Ret.): I’ll lead into this answer by reminding you of the time that former Chairman of the Joint Chiefs of Staff Marty Dempsey warned of the threat to national security posed by the rising U.S. debt. I do not believe that in an unconstrained environment, any nation can still hang with us. If we set our mind to do something, we're as good or better than anybody. Remember, this is demonstrated by the fact that at least one of our main adversaries is aggressively stealing our stuff, and the other one, to some degree, is stealing our stuff, because they know they can do it, and they don't have to pay for it.

In an unconstrained budgetary environment, I will bet on America every single time, period. But in an environment where we're not going to be unconstrained, and we're going to face budget issues and where the enemy can still short circuit their investment by stealing ours, we have issues. I was talking recently with some really sharp people on AI and quantum computing, and I asked them why others seem better at this than us. And the researchers from this highly respected school said they are not better than us, and we could be better than them. But remember that their nations are investing a tremendous amount of money and research into these things. They're investing and they're moving things from their labs to their military industry. We in the U.S. haven't invested in the same way and we haven’t made the policy decisions to move out and get what we can into the field. So, they are fielding better stuff than we are, but they're not ahead of us in the race to development. Given the decision to be the best in the world on these technologies, I'll bet on America every time. But you have to have the upfront commitment and decision.

The Cipher Brief: What concerns you the most in terms of the AI race with U.S. adversaries?

General Breedlove (Ret.): I've had this conversation with several very senior people in education and research. We think differently. For instance, when it comes to big data in the U.S., we only have a couple of schools that turn out data engineers and data scientists. I'm referring here to the masters and PhD levels. The way that almost all U.S. schools do this is to start students in mathematics or computer science, or some other related research or analysis function, and then somewhere later in their studies, they focus on data.

China, for example, is building schools and turning out large numbers of students who start thinking about data in their freshman year, and they stay focused on data throughout their education. A person who starts out in data sees data differently than a person who starts out as a computer engineer, or a mathematician. The people who are really going to understand data and the way it reacts to AI, and the way that we use it in these new quantum computing capabilities are going to be ahead of us because of this great push to understand that data is different than other things and needs to be looked at in that way.

The Cipher Brief: How do you think fighter pilots of the future will think about this data and technology in the cockpit?

General Breedlove (Ret.): The bottom line is, there are these attitudes and pride that get in the way a little bit. Some folks believe that we're never going to move forward with this technology because fighter pilots don't want to give up the control. I think for most young fighter pilots and for most of the really savvy older fighter pilots, that's not true. We want to be effective, efficient, lethal killing machines when our nation needs us to be. If we can get into an engagement where we can use these capabilities to make us more effective and more efficient killing machines, then I think you're going to see people, young people, and even people like me, absolutely embracing it.
