OPINION — In recent years, senior U.S. Department of Defense leaders have repeatedly emphasized that the U.S. military’s most important asymmetric advantage is not a weapon system or a budget line – it's the ability of our enlisted corps and junior officers to operate autonomously.

This concept, known as mission command, empowers military subordinates to take a commander’s intent and innovate each of the steps necessary to achieve it. This is a philosophy based on trust, initiative, and decentralization, which has repeatedly proven to be decisive on the battlefield.

A recent example of this is how Ukrainian forces, trained extensively by NATO advisers in mission command principles, have been able to adapt tactics in real time, innovate battlefield solutions, and exploit short-lived opportunities much faster than their Russian adversaries. The result is a resilient force that can keep operating even when communications are degraded and leadership is under fire, and that achieves markedly better tactical outcomes.

This isn’t just a military curiosity. It’s the outward expression of something more profound: a cultural operating system. And that operating system may become just as important in the coming era of AI-enabled competition, particularly between the United States and the People’s Republic of China.

Cultural Operating Systems

In the West, especially in Anglo-American military and corporate cultures, autonomy is rewarded and encouraged. Mistakes made in good faith are often framed as learning opportunities, and initiative is a career accelerant. From early education through professional life, we instill the idea that solving problems independently is a virtue.

In the PRC, the dominant pattern of hierarchical collectivism is almost the opposite. Deep-rooted cultural norms reinforced by the political system strongly discourage independent deviation from the plan. In Chinese culture, errors can carry lasting personal consequences for the individual and even for their family’s honor. In the PLA, stepping outside one’s lane, even for a good reason, can risk professional ruin. In the business and research worlds, the same reflex applies: wait for guidance from the top, execute precisely as instructed, and avoid being the point of failure.

This is not to say there is no innovation in the PRC – there clearly is. But the default behavior in both military and civilian hierarchies is to minimize personal risk by minimizing independent decision-making. That cultural reflex has centuries of reinforcement, and it is amplified by a political system that prizes conformity and punishes deviation, often very harshly.

Of course, these are broad cultural patterns, not absolutes, and cultures can adapt and change. Deep-seated tendencies shape short- to medium-term outcomes, but they can shift over time. Western institutions can also lapse into bureaucracy and risk-aversion, while some Chinese firms, particularly in the tech sector, have shown impressive bottom-up innovation. Still, the prevailing norms shape how each system tends to operate under stress.


Why This Matters for AI

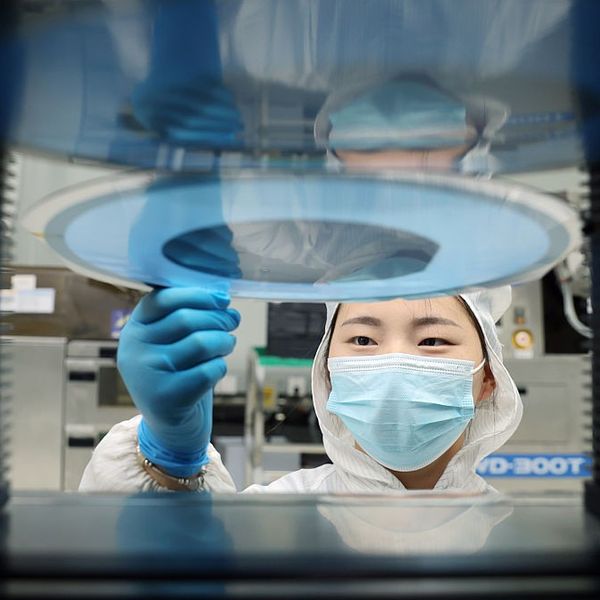

At first glance, artificial intelligence might seem like the great equalizer. With enough computing power, data, and engineering talent, any nation can field cutting-edge AI systems. The PRC has invested massively toward that goal, with clear state directives to become the world leader in AI by 2030.

But AI is not a plug-and-play solution. It is a force multiplier, and what it multiplies is the quality of the human decision-making and adaptation around it. To get the most from AI in complex, dynamic situations, you need people who can spot an opportunity the algorithm missed, reconfigure the system on the fly, and take calculated risks without waiting for permission. While humans remain in the loop, AI will amplify the advantage of cultures that reward initiative.

That is exactly what mission command principles train people to do. And this is where a culture that prizes autonomy has the edge.

If the human operators are hesitant to deviate from a directed procedure, even when the situation demands it, AI’s potential will be throttled. If they fear punishment for a failed experiment, they will never push the system to its edge cases, where the real breakthroughs often occur.

In practice, this means AI doesn’t just enhance analysis, it enhances the speed of adaptation. Environments that empower fast, local decisions will see AI act as an accelerant, while those that centralize approvals will see it act more like a bottleneck.

The Battlefield as a Laboratory

We’ve already seen how this plays out in the military sphere. Ukrainian small-unit leaders have improvised drones for new missions while in the field, invented new countermeasures to defeat Russian electronic warfare, and adapted tactics mid-battle without waiting for top-down approval. In many cases, these adaptations have been faster and more effective than any centrally directed plan could have been.

Contrast that with the PLA’s tightly scripted training patterns. Western observers note that even in exercises designed to simulate chaos, Chinese units tend to execute along predetermined lines. The result is a force that can be formidable when executing a well-prepared plan, but struggles to improvise when reality diverges from the script.

As an old American military quip puts it: “If we don’t know what we are doing, the enemy certainly can’t anticipate our future actions.”

In intelligence, logistics, and cyber operations, AI can identify anomalies or even propose courses of action, but deciding what matters and how to respond in the context of a broader scenario is still a human responsibility. Nations that push those decisions to the edge will move faster and adapt better than those that force every choice through a centralized chain of command.

Essentially, in environments that value autonomy, AI shortens the OODA (observe, orient, decide, act) loop, turning rapid detection into rapid adaptation. In centralized systems, where decisions must wait for top-down approval, AI risks becoming just another layer of data without adding real speed or agility.


A Whole-of-Society Dimension

Military doctrine and societal behavior share common roots, though the relationship between them is not one-to-one. The same cultural norms that encourage or discourage initiative in uniform tend to shape how individuals approach risk-taking in business, research, and public service.

Underlying culture shapes how societies approach research, entrepreneurship, and governance in all facets of life, not just military decision-making.

In the U.S., a 24-year-old engineer can quit their job, raise venture funding, and challenge an industry incumbent, often with encouragement from former bosses. In the PRC, the same scenario is far riskier, particularly in strategic areas. Corporate and bureaucratic environments offer very little room for, or tolerance of, bottom-up innovation.

Of course, there is nuance here. Within the West, differing approaches can also be traced, at least in part, to cultural considerations. For example, the EU's AI Act, which entered into force in August 2024 and whose obligations begin phasing in from 2025, prioritizes "trustworthy AI" and human oversight, reflecting a Western focus that isn’t purely on speed or bottom-up innovation. And China's success in areas like high-speed rail, quantum computing, and specific aspects of space exploration demonstrates a capacity for rapid advancement within a more centralized framework.

These cultural and social differences subtly shape how societies use AI, and therefore how quickly each side can integrate it into complex, adaptive systems.

Strategic Implications

If we accept the idea that cultural operating systems can provide a strategic advantage, several implications emerge:

● Double Down on Autonomy in Training

○ The U.S. and allies should continue to train military and civilian leaders in decentralized decision-making, making it a core competency in both defense and public-sector AI adoption.

● Export the Model

○ Just as NATO’s training transformed the Ukrainian military, mission-command-style education can be exported to partners and allies to improve their adaptability in both military and civil sectors.

● Guard Against Erosion

○ Few things erode autonomy over time like bureaucratic creep and risk aversion. Acquisition delays and corporate risk-management cultures within military commands are constant reminders that this advantage can be lost. Maintaining this advantage will require conscious leadership commitment to rewarding initiative and tolerating (or even encouraging) smart failures. The tech industry has embraced this approach with its “fail early, fail often” mantra.

● Leverage in AI Partnerships

○ When collaborating internationally on AI projects, the West can provide not just technology, but the organizational models that make technology effective under stress.

The Long Game

None of this is to underestimate the PRC’s capacity for technological progress. Beijing can and does produce world-class engineers, scientists, and technologists. It is investing heavily in AI research, infrastructure, and talent. But the cultural and institutional habits that govern how that talent is used are much harder to change. Culture is not destiny, but it is sticky. In fast-moving crises, ingrained reflexes shape how organizations actually perform under stress.

For NATO and Western allies, this is an opportunity. In an era when many advantages are fleeting, cultural operating systems are deeply rooted and can be a durable source of strategic differentiation.

The AI race will ultimately be measured in how quickly organizations can adapt, iterate, and exploit the unexpected. In that regard, the West’s culture of autonomy may prove to be its most powerful and enduring advantage.

The Cipher Brief is committed to publishing a range of perspectives on national security issues submitted by deeply experienced national security professionals.

Opinions expressed are those of the author and do not represent the views or opinions of The Cipher Brief.
