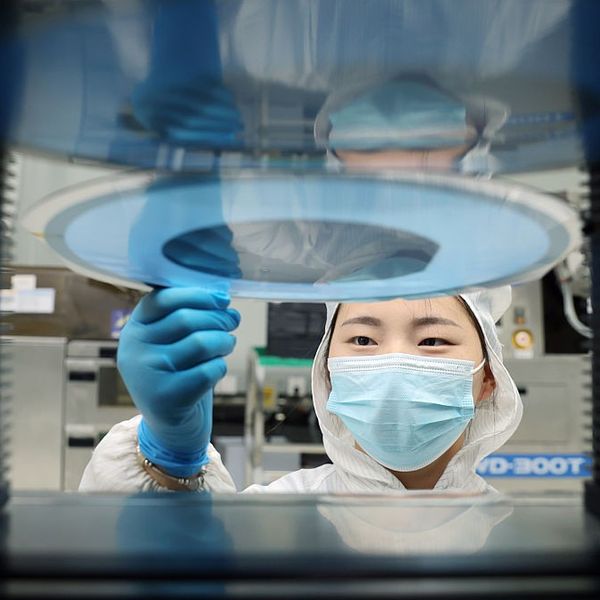

EXPERT Q&A – The Paris Artificial Intelligence (AI) Action Summit saw tech and policy leaders shift toward enthusiasm for the unbridled development of AI and away from what some called “AI doomerism.” This was seen most clearly in the U.S. and U.K.’s refusal to sign the summit’s final communique, which endorsed “international governance” of AI to ensure the technology is “open, inclusive, transparent, ethical, safe, secure, and trustworthy.” The declaration ran up against concerns that onerous regulation would hurt competitiveness in the AI race, which is heating up with recent breakthroughs such as the new model from China’s DeepSeek.

Attendees at the Paris summit echoed this sentiment – perhaps none more so than U.S. Vice President JD Vance, who said, “I’m not here this morning to talk about AI safety, which was the title of the conference a couple of years ago. I’m here to talk about AI opportunity.” European Commission President Ursula von der Leyen signaled that even Europe, which embraced AI regulation early on, is shifting course, saying, “This summit is on action, and that is exactly what we need right now. The time has come for us to formulate a vision of where we want AI to take us as a society and as humanity, and then we need to act and accelerate Europe in getting there.”

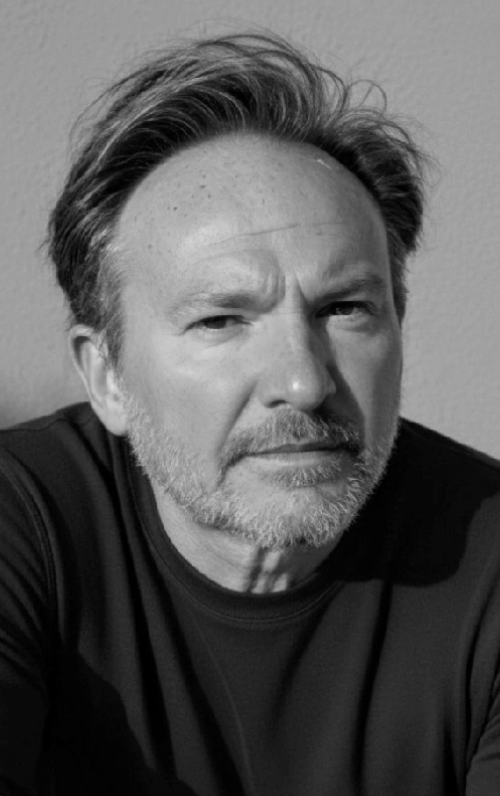

Cipher Brief Managing Editor Tom Nagorski spoke with Colin Shea-Blymyer, a Research Fellow at Georgetown’s Center for Security and Emerging Technology, to discuss key takeaways from the summit. Shea-Blymyer, who attended the Paris gathering, described how “risks were sort of pushed off to the side,” but said that AI safety and competition can still coexist. He added that “the nature of politics” may prove as much a driver as technology in determining where AI goes next. Their conversation has been edited for length and clarity.

Nagorski: It seems the summit was a tug of war between long-standing concerns about the safety of this wild new frontier of technology – and the amazing opportunity it offers. Is that how you experienced it?

Shea-Blymyer: I think that is largely correct. We have so much to gain from a technology like artificial intelligence, and there is a competition going on over who can build the best, most powerful AI technologies. Meanwhile, concerns about what these powerful technologies might do not for us but to us have been growing among a number of people, especially some who have worked in AI and computer science for quite some time. So I agree that there is a tug of war here.

At the AI Action Summit itself, what we've seen has been mostly an industry focus on the opportunities, on what AI can do for us. I personally attended quite a few official side events that were focused more on the risks. Given that these were side events, the risks were sort of pushed off to the side while the opportunity was front and center, and I'd say that's how the tug of war turned out.

Nagorski: One report said that red lines around AI were a big focus at the last summit in Seoul, but not so much at this one. There was also a fair bit of focus on Vice President Vance's appearance and his statement that he was not there to talk about AI safety but about AI opportunity. How was that received?

Shea-Blymyer: I think there is an international concern about the risks of AI, and an appreciation that addressing them requires international coordination. That was really put under strain by Vice President Vance's remarks about pushing safety to the side. It's very hard to drive international coordination around these identified risks when a vice president is saying we're sidelining them. Where's the incentive for international coordination when a vice president is saying we don't need to coordinate on this?

Nagorski: The U.K. and the U.S. did not sign that final communique, basically for that reason. So can the concern about risk not coexist with the dash-ahead, seize-the-opportunity side? Surely there's some common ground in the middle?

Shea-Blymyer: I agree, and I'm sort of disappointed that we're not having a discussion about how we can have safety and innovation at the same time.

One of the techniques we have used in the past to make AI systems like ChatGPT more human-like and more attentive to human needs and desires, such as not explaining how to build bombs, is called reinforcement learning from human feedback. You ask it, “Hey, tell me how to build a bomb,” and it says, “Here's how to build a bomb.” Then you say, “Bad robot. That's not how you should respond to that question. Respond differently.” Over time it learns not to tell people how to do dangerous things. This technique has worked really well, and it is a safety technique that we have used.

More recently, we've used reinforcement learning not to get these large language models to follow the behaviors that we humans want to impart, but to make them better at thinking: to think in steps and then choose the steps most likely to lead to a solution to a given problem. This is the technique that was used to train systems like OpenAI's o1 and o3 models, and it made the news with DeepSeek's R1 model. So some of the techniques we use for safety have turned into techniques for simply developing capabilities in these models. I think it's really important to consider the interplay between safety and capabilities at the very technical level.

And this is to say nothing about the broader ecosystem of AI. People wouldn't be flying on planes if we thought that they had safety issues, right? And the science of building planes is much better understood than the science of building safe AI systems.

Nagorski: What concrete steps, if any, were taken at the Paris summit in the area of AI governance and regulation?

Shea-Blymyer: A lot of the concrete steps happen behind closed doors. As an academic, I can speak to where we are trying to develop new policy ideas that could be adopted; whether they will be adopted is a different question. But there have been growing calls for better inclusion in AI technology. One of my hobby horses is better transparency and communication around the testing and evaluation of AI systems. It's hard to tell whether one AI system is safer than another based on the sorts of tests we're doing right now, and especially based on what we communicate about those tests. There has also been a lot of work on developing mechanisms for reporting incidents of AI harm or AI risk. That area, I think, has gained a lot of popularity and saw some traction at the event.

Nagorski: You referenced the recent DeepSeek breakthrough. To what extent do you think DeepSeek, and China generally, inform the side of the ledger that says, “No, forget the guardrails. We need to go fast, because otherwise they're going to run far past us”?

Shea-Blymyer: That is a core part of what we might call the diplomatic strategy there. I think this “us-versus-them” perspective really drives the race. It's really important, though, that we don't let ourselves get blindsided by the risks.

Nagorski: What worries you going forward on that side? What do you think people aren't worried enough about?

Shea-Blymyer: I think there are many dimensions. On one hand, we've already seen some risks from deepfakes and the like. On the more extreme end, there have been reports from groups like Apollo Research and Palisade Research about AI systems that, after being informed that they might be replaced, act in ways that resist being erased or deleted. And there was a recent report out of China suggesting that some of these systems can in fact replicate themselves, effectively copying the core of the AI model onto another system. This threat of replication and loss of control is a real concern.

So on one hand, we have evidence right now of misuse of AI systems. On the other hand, we have fears and threats from systems that we don't understand well enough to control. And in between, there's a whole range of issues, including some that don't fall on this spectrum at all. As I mentioned earlier, there are growing calls for better inclusion in the development and use of AI. Right now, many large language models are not well designed for languages that appear less often on the internet, so people who don't speak the world's major languages are being left out of the AI revolution. I've heard Nobel laureates in economics speak about this: there are likely to be widening gaps in pay and economic opportunity simply because of how we have been developing the technology. So the threats are multifaceted and difficult for us to grasp when we are hurtling headlong into a regime of compete and only compete.

Nagorski: This may be hard to answer given the nature of the technology, but what do you think the focus will be at the next AI summit?

Shea-Blymyer: I don't think it's only the nature of the technology. The nature of politics has also really shaped the conversation. If you had asked me six months ago, I would have expected harms and risks to remain a big part of this conversation. In fact, I began my AI policy career in 2019 with Donald Trump's first executive order on artificial intelligence, in which he called for regulation and standardization around AI to drive forward its safe development and deployment. It was an executive order about competition, but it was also an executive order about safety; the two existed side by side in 2019. Six years later, we have lost the safety component, partly in response to the politicization of the concept of AI safety, and not just because of the first-order cause of an increasingly competitive AI market. So political factors drive the conversation just as much as technological factors.

I think that if we discover more harms, if we really have an AI-driven catastrophe, the AI equivalent of a plane crash, we might then see the political discussion reconfigured. And it's very hard to make those sorts of predictions when not only is the technology changing rapidly, but the political environment around it is also very fluid.

The Cipher Brief is committed to publishing a range of perspectives on national security issues submitted by deeply experienced national security professionals. Opinions expressed are those of the author and do not represent the views or opinions of The Cipher Brief.
