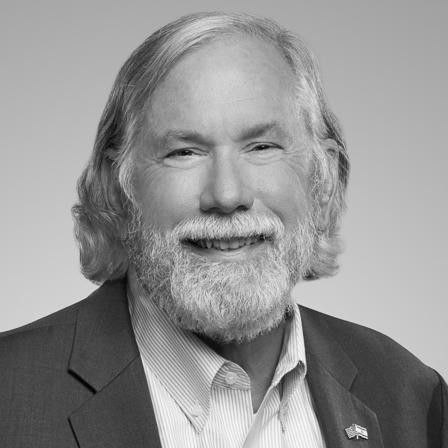

EXPERT Q&A — Cyber Initiatives Group Principal and Former Director of Signals Intelligence at NSA, Teresa Shea sat down this week with Dan Geer, a Senior Fellow at In-Q-Tel, where he also served as CISO. Geer has contributed much to the understanding of cyber and risk during his decades-long career. The Cipher Brief is pleased to bring you their exclusive conversation about cyber, risk and the rough road ahead.

Teresa Shea: Hello, my name is Teresa Shea, and I am super excited this morning to be talking with the famous Dan Geer, whom I consider to be one of our very few national assets in this country. I first met Dan when I worked at In-Q-Tel, a strategic investment firm for the intelligence community, back in the 2015 timeframe. He is now a senior fellow at In-Q-Tel as a consultant, but he was the CISO there for many, many years, and he's currently on a small farm in rural Tennessee, where his only phone is a landline. So we're doing this via audio, but I certainly hope you all enjoy Dan's commentary. He's done so many things in his career, and he is, I think, a famous computer security analyst and risk management specialist. He is recognized for raising awareness of critical computer and network security issues before the risks were widely understood, and for what I consider to be groundbreaking work on the economics of security.

He's a brilliant technologist, but also a profoundly deep thinker, and he has been an invaluable mentor to me and so many others. He received his Bachelor of Science in electrical engineering and computer science from MIT, where he worked on Project Athena, a campus-wide distributed computing environment launched back in 1983, and he received a Doctor of Science in biostatistics from Harvard. He has worked for a variety of companies and has so many firsts, including creating the first information security consulting firm on Wall Street and convening the first academic conferences on electronic commerce and on mobile computing. Back in 1998, he delivered the speech that really changed the focus of security, titled "Risk Management Is Where the Money Is." He was the president of the USENIX Association and is the author of numerous groundbreaking works, including, just to name a few, "CyberInsecurity: The Cost of Monopoly" (2003) and Economics and Strategies of Data Security.

He's a recipient of the USENIX Association's Lifetime Achievement Award and, I'm very proud to say, has served as an expert for the NSA Science of Security Award from 2013 to the present. He's a six-time entrepreneur and has testified before Congress five times, twice as lead witness. Dan, I know I've left a lot out. Is there anything you would add to that to start us off? I know that today you are, and have been for a long time, living on your farm there in Tennessee, and you're a proficient small farmer and beekeeper, so I'm just thrilled to have you. Welcome.

Dan Geer: Thank you for the introduction. I have nothing to add. Introductions always make me uncomfortable, so thank you for doing that.

Shea: Well, you've certainly earned it. So, Dan, let's talk about your most recent article, "It's Time to Act," which appeared in Lawfare. You started it with, "We've known for 20 years it was coming; is this crisis too good to waste?" I think you were referring to the CrowdStrike software update error, since the article came right after it. In reference to that, can you share with us what you mean by "We've known it was coming for 20 years"?

Geer: Sure. I'm going to be a little pedantic here. In my view... Well, let me just say that everything I'm about to say throughout this interview is, quote, in my view, unquote, so we won't waste time saying that over and over. To me, the wellspring of risk is dependence. You're not at risk from something unless you're dependent on it, and that dependence can be both physical and metaphorical. You can be dependent on peace, for example. But because dependence is transitive, so is risk. That you may not yourself depend on something directly does not mean that you do not depend on it indirectly. We, or at least I, call the transitive reach of dependence interdependence, which is to say correlated risk. In society today, the biggest correlated risk is that of the internet, except perhaps climate, but we'll ignore climate for this discussion. Our concern, then, is unacknowledged correlated risk. The unacknowledged correlated risk of cyberspace is why it's capable of black swan behavior. Unacknowledged correlations contribute, by definition, to heavy tails in the probability distributions of possible events.

Nassim Taleb is by far the most widely read person on that topic, so I will quote him: "Complexity hides interdependencies, ergo complexity is the enemy of security. And in particular, complexity characterizes the behavior of a system or a model whose components interact in multiple ways and follow local rules, meaning there is no reasonable higher instruction to define the various possible interactions." So when I said we knew it was coming for 20 years, what I was talking about was that we had been watching a buildup in interdependence, which most days is a lovely thing, don't get me wrong. But that buildup sets the stage for the occasional failure of what seems like a small part to propagate to the failure of much larger parts. Devotees of the webcomic xkcd know which cartoon I'm speaking of right there. We have known that interdependence has been growing, and we knew that it was possible for it to produce black swans. CrowdStrike was one, but only one.

Shea: Yeah. So that doesn't bode well for our current state, because we've certainly made our systems interdependent and complex. In the article, you make the point up front that attacks differ crucially in pre-event protection planning. Could we avoid these events? Do you have any advice on how we might avoid them?

Geer: Well, in my view, there are only two risks that rise to the level of national security. One is a single point of failure in something that is absolutely essential, like, for example, the domain name system. The other is anything that sets off cascade failure. And we know that, as I said, complexity hides interdependence, and unacknowledged interdependence is a source of black swan events, including cascade failure. The most important thing to know about defending against cascade failure is that cascade failure is ignited by random faults and quenched by redundancy.

In other words, random failures, say someone mows down a telephone pole or digs a fiber cable out of the ground with a backhoe, are mitigated and protected against by redundancy. However, if the event is not random but rather the work of a state-level actor or some other sentient opponent, and sentience is the most important part of this, then redundancy can actually be a force multiplier for the attacker. That was what I was getting at. The mythical prudent man plans for maximal damage scenarios, not for most probable scenarios. And in that sense, we need to preserve, to the extent we can, uncorrelated operational mechanisms. In other words, if this fails, it doesn't mean that that fails. Or, if this fails, the other thing that depended on it can go to a mode of diminished operation. That's the difference: if your opponent is sentient, which is the case in many circumstances, then you have a different suite of protections to think about than if your opponent is stray alpha particles or mowed-down phone poles.
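
The contrast between random faults and a sentient opponent can be made concrete with a toy model. This is not from the interview: the probabilities are hypothetical, and the attack model (one working exploit breaks every replica that shares a design, and distinct designs fail independently) is an assumption chosen only to illustrate the point about uncorrelated mechanisms.

```python
# Toy model, hypothetical numbers: identical redundancy quenches random faults
# but does little against a sentient attacker.
import math


def outage_probability_random(p: float, n: int) -> float:
    """Service is down only if all n replicas fail from independent random faults."""
    return p ** n


def outage_probability_attack(q: float, n: int, distinct_designs: int) -> float:
    """Assumed attack model: one exploit for a design breaks every replica built on it,
    and distinct designs are broken independently, each with probability q."""
    if not 1 <= distinct_designs <= n:
        raise ValueError("distinct_designs must be between 1 and n")
    return q ** distinct_designs


def replicas_lost_to_one_exploit(n: int, distinct_designs: int) -> int:
    """Largest number of replicas a single exploit can take out, assuming replicas
    are spread as evenly as possible across designs (the force multiplier)."""
    return math.ceil(n / distinct_designs)


# Three identical replicas, 1% random failure each: roughly a one-in-a-million outage.
print(outage_probability_random(0.01, 3))
# The same three identical replicas against an attacker with a 50% chance of success:
print(outage_probability_attack(0.5, 3, distinct_designs=1))  # 0.5, no better than one box
print(outage_probability_attack(0.5, 3, distinct_designs=3))  # 0.125
```

Under these assumptions, adding identical replicas drives random-fault outages toward zero while leaving the attacker's odds unchanged and letting one exploit take out every copy; only diversity, that is, uncorrelated mechanisms, improves both.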

Shea: That's kind of scary. When I think about the prudent man planning for maximal damage scenarios, I often think about cyber warfare, for example. I know you've written forewords for numerous books, one of them being Inside Cyber Warfare by Jeffrey Caruso, and you make the statement in there that cyber war is a reality. Can you expand on that a little bit for us, on the implications of cyber war?

Geer: Well, Teresa, as you know, I don't actually have a military background, so please apply a skepticism filter here. But I strongly suspect that, of all the tools available to the military short of nuclear arms, cyber has the greatest force multiplication. As a sort of current-events marker for this, note that the big reinsurer Munich Re just announced that it was willing to walk away from the cyber insurance business because it has decided to exclude cyber war from its entire portfolio. The quote I will give you here is directly from them: "If we need to give up the entire business, we are prepared to do so because we simply think we cannot insure cyber war. Losses from cyber war could be a systemic event that neither the insurance nor reinsurance industry could survive."

So I would say that, in corroboration of my opinion, this is a decision taken by a knowledgeable and important player. The reinsurance industry is really where the rubber meets the road. And the fact that, as they say, "If we need to give up the entire business, we're prepared to do so," tells you, I think, that there's a reality behind this that isn't just a stray opinion.

Shea: I saw that, and we still don't really have a good definition of cyber warfare, one that would let us determine the actual cause of a cyber attack and characterize it as warfare versus, for example, espionage. It will be interesting to see whether other insurance and reinsurance companies follow suit, so excellent point. Thank you for that. So, do you have any recommendations on cybersecurity books that you think the community needs to read?

Geer: I don't read a lot of cyber security books actually, which sounds grandiose, and I certainly don't mean it that way, it's just-

Shea: Well, that's because you're busy writing them.

Geer: No, not really. But let me recommend a relatively new book, only months old, from MIT Press. The author is John Downer. You can get a free e-book from MIT Press's website. The title is Rational Accidents, and the subtitle is Reckoning with Catastrophic Technologies. I think a cybersecurity-knowledgeable reader would find themselves, as I have, frequently thinking "Well, what if" while reading it, and then coming up with a cybersecurity scenario to go with that what-if, related to the points the book is making. In other words, this book is more risk-centric than it is cyber-centric, but I don't view those as being at odds with each other.

Shea: Yeah. And the other thing I was going to ask you about, Dan, is the argument you and Andrew Burt made in Flat Light, which I thought drew a great analogy to the airline industry, and I love the fact that the pilot was female. Can you talk a little bit about that?

Geer: You're entirely welcome. If you didn't know that, I have four daughters and no sons, multiple aunts and no uncles, and so perhaps that explains something.

Shea: I really enjoyed Flat Light as well; I thought it was great, well done. Let's go back in time just a few years, to 2016, when you wrote what I thought was an excellent piece on cybersecurity for the 45th presidency. Now that the same president, Donald Trump, is our 47th president, I'd like to revisit some of your recommendations. The first: does cyber offense still have a structural advantage over cyber defense, especially given the advancement and availability of AI now?

Geer: Well, I think it does. I'm sure this is a question that your former colleagues at the agency think about a lot. If you know you're at a strategic disadvantage, there are obvious decisions that come from that. If you think you're in a position to possibly have a strategic advantage, there are other decisions that come from that. And again, I am sure I'm not the only thinker on this topic. But in answer to your question, does offense still have a structural advantage over defense? I believe it does. But we will soon enter, if we're not already in it, what you might call a natural experiment in applying machine learning to this problem.

There was a recent announcement that machine learning tools had detected a vulnerability previously undetected by people. That is, I think, just a marker in time. I don't think the fact that we found one means anything in itself. But as a marker in time, if you ask, "When did it start that we could find them this way?" the answer would be now. The application of AI may help us work on what I view as the structural conundrum in cybersecurity: were we to have complete knowledge, would we find that vulnerabilities are sparse or dense? If they are sparse, then the treasure and effort spent finding and fixing them is well spent, so long as we're not deploying new vulnerabilities faster than we're curing old ones. So if they are sparse, go get them. If, on the other hand, they are dense, then don't bother finding them. It's a waste of treasure, and it misinforms you as to whether you're getting safer or not.

If we cannot answer whether they're sparse or dense, then I think we need to default to instant recovery, which is to say driving the mean time to repair to zero, rather than defaulting to perfection, which is driving the mean time between failures to infinity. So the dynamic there is: do we try to prevent these, or do we try to have an environment in which, when they occur, they are effectively meaningless? If we don't know the answer to sparse versus dense, and we make the prudent decision to plan for maximal damage, then we have to go in the direction of driving mean time to repair to zero. That's my logic, anyway.
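
The arithmetic behind driving mean time to repair to zero follows from the standard steady-state availability formula, A = MTBF / (MTBF + MTTR). The sketch below uses hypothetical figures and illustrates only that formula; it is not a model Geer proposes.

```python
# Steady-state availability A = MTBF / (MTBF + MTTR): the fraction of time a
# system is up. All figures below are hypothetical.


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Fraction of time up, given mean time between failures and mean time to repair."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("need mtbf > 0 and mttr >= 0")
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Chasing perfection: rare failures (MTBF 1,000 h) but slow repair (10 h).
print(availability(1_000, 10))   # about 0.990
# Dense vulnerabilities: a failure every 2.4 h (ten a day), but near-instant recovery.
print(availability(2.4, 0.001))  # about 0.9996
# MTTR driven to zero: availability is 1.0 no matter how often things fail.
print(availability(2.4, 0.0))    # 1.0
```

Under this formula, pushing MTTR toward zero makes availability insensitive to MTBF, which is why instant recovery is the safer default when you cannot tell whether vulnerabilities are sparse or dense.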

Shea: I do think the vast majority of experts agree with you on that, especially given that we're increasing the size of our attack surface faster than we're able to build in the defenses and security we need. Being able to solve a problem immediately upon discovering it helps drive mean time to repair to zero. But it's hard, right? Let's face it, there's always a vulnerability that could potentially be exploited, so addressing it is hard. I know it's been a while, but you've done a lot of work on optimality and efficiency versus robustness and resilience, and the balance between them, and I think you gave a talk on it several years ago. Tell me, have we resolved this? Do you think we're at "We know the answer"?

Geer: Well, on the question of whether going for optimality and efficiency is risk-producing, I would argue that it is a settled issue: going for optimality and efficiency does create risk. When I was an undergrad, the rule of thumb was that 40% of your code should be error handling. God only knows what the rule is now, or if there even is a rule, or if that was my misinterpretation of what the lecturer was saying. But let's suppose that 40% of your code ought to be for error handling. That is a recognition that efficiency is not everything.

It seems to me that the fragility problem, which of course was addressed well by Taleb in his book Antifragile, is a marker for, in effect, how well we are doing. This is a security metrics problem. If we had a measure of how fragile a software system is, that would be extremely useful, even if the measure were not particularly good, so long as it at least produced an ordering. In other words, an ordinal scale for fragility would be a very useful thing to have. I don't quite know how we get that. I think it has to come out of the complexity work, which is why the Santa Fe Institute was interested in this even 10 years ago. But that is where I come down on this: it is, if not true, at least a reasonable assumption to make that focusing solely on efficiency generates risk.

Shea: There was an argument there that making a system more efficient makes it more brittle and fragile, and less robust. So, well said. Now I have to ask about attribution, which is a continual challenge for the national security space. Are we making progress? Is there any hope we're going to get to an attribution solution?

Geer: I don't want to sound trite here, but I'll come back to you and ask: is there any hope for geocoding the entire internet? Is there any hope for applying the principles of Westphalian sovereignty to network communications? By which I mean that under the Treaty of Westphalia, if anybody shoots over my border toward you, that is my fault, regardless of whether or not I knew they were going to do it. That idea of responsibility for emanations, whether bullets or network packets, is not a bad principle, though I can't imagine you would get the most troubling countries to agree to it.

So, your question is, "Is there hope?" Some years ago, there was a proposal, which I don't think went anywhere, that a small fraction of packets passing through any top-tier backbone provider would be marked: say, one in 1,000 packets, or one in 100,000 packets, would have a mark added to it. Then if you had, for example, a denial of service attack, you would still have enough evidence to point to where it came from, even though the vast majority of the packets you received were not marked. It was an interesting idea, I thought. It was a little bit like adding a radioactive tracer to the water in the bilges of a tanker, so that if you find a little bit of it somewhere, you know the tanker was there and discharging water. So, in other words, the answer to your question is yes, there are technical solutions. I don't know how to get from "yes, there are technical solutions" to an installed base, but I think that's where the challenge is. I don't think it's on the technical side.
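
Geer does not describe the proposal's mechanics. As a hedged illustration only, the sketch below simulates one published variant of the idea, probabilistic packet marking with node sampling, in which each hop stamps its identifier on a passing packet with a small probability. The backbone names, the marking rate, and the flood size are all hypothetical.

```python
# Hypothetical simulation of probabilistic packet marking (node sampling):
# each backbone hop stamps its own ID on a passing packet with a small probability,
# overwriting any earlier stamp. Hop names and rates are made up.
from __future__ import annotations

import random
from collections import Counter


def forward(path: list[str], mark_prob: float, rng: random.Random) -> str | None:
    """Send one packet along `path`; return the last hop that stamped it, if any."""
    mark = None
    for hop in path:
        if rng.random() < mark_prob:
            mark = hop  # a later hop overwrites an earlier mark
    return mark


def trace_flood(path: list[str], packets: int, mark_prob: float, seed: int = 0) -> Counter:
    """Count the marks a victim would collect from a flood arriving over `path`."""
    rng = random.Random(seed)
    marks: Counter = Counter()
    for _ in range(packets):
        hop = forward(path, mark_prob, rng)
        if hop is not None:
            marks[hop] += 1
    return marks


attack_path = ["backbone-A", "backbone-B", "backbone-C"]  # hypothetical providers
marks = trace_flood(attack_path, packets=200_000, mark_prob=1 / 1_000)
print(marks)  # roughly 200 marks per hop, from well under 1% of the packets
```

Even though only a fraction of a percent of the flood carries a mark, every hop on the path shows up in the tally. Recovering the order of the hops takes more packets or richer marks; this sketch only shows why a tiny marking rate can still leave enough evidence.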

Shea: I consider that good news, although I don't see either of your two examples really happening. Those are great examples, though. So let's talk about secure software. We know secure software is hard to produce, and companies need to update it. Anyone who is online experiences those frequent updates. How do you accomplish both, secure software and frequent updates, especially since I think you have said that software should be lightly modified? What are your thoughts on that?

Geer: Well, yes. Auto-update, as you know, should be thought of as dual-use. Wasn't the NotPetya problem instigated by an auto-update to some obscure Ukrainian accounting software? Something obscure like that is my point. Where you say, "Well, that can't be bad," the answer is: just stand back and watch. That problem of auto-update caused me, and I say this reluctantly, and I mean that, to come to the conclusion that auto-update is probably not a good idea for everyone. For some entities, it probably is a good idea. For some entities, the right approach is staging it to an internal test bed first, and then, and only then, to their own installed base. And for other entities, it's probably not a good idea, particularly if the entity is an obvious target.

Brian Krebs and I both agree on that, for what it's worth. I wrote about it and, oddly enough, did not receive any hate mail, even though I was fully expecting some. On the other hand, this all may be irrelevant now, or becoming irrelevant, if we are entering an age of AI, generative AI in particular. Software is supposed to be deterministic, by and large. You put in this input, you get out that output; you turn this on, it goes on; you turn it off, it goes off; et cetera, et cetera. Generative AI is stochastic all the way down, and if you turn off the stochastic part, you don't get the kind of value-add you would otherwise expect. As such, we may be entering a situation where the worry about auto-update is not "Do I do it or do I not?" but "I'm doing it all the time, and I can't see it because it's stochastic." That, I think, is a really important question, and I dearly hope that careful and expert minds are studying it. If they are, I suspect it's inside the big providers: Anthropic, OpenAI, Google, Microsoft, and so forth. I suspect their research groups are at least thinking about this issue, namely that non-determinism is a useful feature but runs counter to the very idea of debugging.

Shea: Thank you for that, Dan. And I hope as they study it that they are going to publish some of their work on that, because I think we'd all welcome that.

Geer: Yes, indeed. And that's where, in a way, we come back to the book I recommended before, John Downer's Rational Accidents: no airline company wants to hide equipment failures from the others. Maybe there was a time they did, but now they don't, and you could go on and on down that path of reasoning.

Shea: Yeah, a lot to be learned there, I think, and lessons to be applied. For new software installations, you have indicated in the past that they should be damped down until live experience gives us some situational understanding. We're in a very fast-paced world, to the point we were just talking about, now that we have generative AI. Is it practical to damp down new installations?

Geer: Well, the one analogy I can point to is the regimen involved in doing clinical trials for new pharmaceuticals, where you have to show safety and efficacy, you have to make special cases for, say, nursing mothers, contraindications have to be well understood, and so forth. And let's be clear: that is not cheap, it is not voluntary, and it produces various kinds of backlash from people who say, "Stop making them test it, just give me the drug." Frankly, that's likely to show up a lot coming up here. So it seems to me that if we are going to put new dependencies, frankly, into the environment, they need a realistic period of testing. And if there's anything I've learned about software, it's that testing is hard. Now that we're into the AI arena, I think it's only possible to test in live action. I don't know that you can set up a genuinely representative sample of what happens at world scale when you introduce ChatGPT, or whatever. I think that's the problem: you can't get a representative, informative, predictive level of testing without going live. That may erase what I've said in other situations, but that's the conundrum. How do we test? Do we test? What does testing mean in a stochastic system, and what does testing mean in terms of rollout?

I know that marketing algorithms are constantly fiddled with, and the fact that you and I are both on the same website doesn't mean a damn thing about, say, what advertisements we'll see; there is a constant rolling of the underlying algorithms. Could we apply that? Is there a lesson to be learned there? I think you'd have to ask the big providers about that.

Shea: That's fair enough, yeah. Just to follow up, real quick, on clinical trials, let's talk about them in reference to digital installations. If we were to make that available, doable, if you will, how would you envision clinical trials for digital installations?

Geer: I'd like to sort of hold off on the answer to that. It's a challenge; maybe I should write something.

Shea: I welcome that.

Geer: I honestly do better sitting in a garret than I do answering a live question of the import of the one you just asked. But let me say that the idea behind clinical trials is that you start with a controlled environment. This group of people gets the drug, that group gets the placebo, and whoever administers the drug or placebo doesn't know which; it's double-blind. You have stopping rules that say, "If it turns out that we're seeing a huge difference in one group of people versus another, we know to stop the trial, because the drug is either so effective that we need to get it out, or so dangerous that we need to stop." And all those sets of rules are the result of years of, frankly, occasional failure and learning from failure.

But there's the idea of an incremental introduction, with control over the characteristics of the experiment that you believe could be determinative, like whether having ever had polio determines whether or not this anesthetic is bad. I'm just making this up. You need to work up from "Well, we covered the most obvious failure modes based on our knowledge of the literature, so now we're going to do something a little more elaborate and look." Or, perhaps more to the point, "We're going to do a follow-up. We're going to wait a year and see whether or not your long COVID has gone away," for example. It's not trivial, but we have refined our technique for doing these over the course of some time. I do hope the new head of the FDA does not screw this up, but it is not for nothing that we have that.
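
As a hedged sketch of how a trial-style stopping rule might carry over to a software rollout, the code below compares the failure rate of an updated group against a control group still on the old version, using a pooled two-proportion z statistic. The counts and the threshold of 3 are hypothetical. Real clinical trials use formal group-sequential boundaries, because checking an ordinary z statistic repeatedly inflates false alarms.

```python
# Hypothetical stopping rule for a staged software rollout, modeled loosely on
# clinical trials: compare failure rates of an updated group and a control group.
import math


def two_proportion_z(fail_new: int, n_new: int, fail_old: int, n_old: int) -> float:
    """Pooled two-proportion z statistic; positive means the new version fails more often."""
    p_new = fail_new / n_new
    p_old = fail_old / n_old
    pooled = (fail_new + fail_old) / (n_new + n_old)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_new + 1 / n_old))
    return 0.0 if se == 0 else (p_new - p_old) / se


def stopping_decision(fail_new: int, n_new: int, fail_old: int, n_old: int,
                      threshold: float = 3.0) -> str:
    """Stop early if the difference is large in either direction; otherwise keep going."""
    z = two_proportion_z(fail_new, n_new, fail_old, n_old)
    if z >= threshold:
        return "stop: harmful, roll back"
    if z <= -threshold:
        return "stop: clearly better, widen rollout"
    return "continue"


print(stopping_decision(30, 1_000, 10, 1_000))  # stop: harmful, roll back
print(stopping_decision(12, 1_000, 10, 1_000))  # continue
```

With these made-up counts, a 3% failure rate against 1% trips the rule (z is about 3.19), while 1.2% against 1% lets the rollout continue.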

Shea: Amen to that, right. It's not for nothing that we have that; well said. And I hope you do put pencil to paper on that one, because as our systems become more complex and interconnected, with the attack surface growing, we need to think about it. Go ahead.

Geer: There are nascent groups, two that I'm aware of, looking at all these questions as questions of public health, which is a slightly different mindset. Medicine says the fact that you have this disease is a tragedy. Public health says the fact that some people have this disease is a tragedy. Public health does not try to cure an individual; it tries to reduce risk at the population level. The two groups I'm aware of that look at cybersecurity as a public health question are pretty active, but still small. This is a new focus for a number of academic and practitioner folks, and the fact that there are two of them is probably also evidence that it's an idea whose time has come. Watch for progress in that space.

Shea: Yeah. Wow, I wasn't tracking that; I wasn't aware of it. And I had not thought about it that way, but that certainly would shed a different light on this whole premise. I can certainly see it; the cybersecurity of implanted devices, et cetera, has been a long discussion, so it'll be interesting. I'd like to hear more about that. Now, we talked about driving mean time to repair to zero and making things faster to recover. How do we recover faster? What are your recommendations?

Geer: Well, maintaining the ability to operate in a diminished mode strikes me as one of those. Look at what came out of the financial crisis in 2008: the so-called stress testing of financial institutions, where you say, "If unemployment rises to here and home prices fall to there," four or five markers, "and those all occur simultaneously, would you still be solvent?" I think we need something similar here. What if a third of all network bandwidth goes away and half of all desktops have to be reinstalled by hand? Take some suite of three, four, five bad things all occurring at once: could you still operate if you're a major supplier of something or other? If one of the major cloud suppliers, for example, had a significant data breach, where would you be? That's what I'm looking at now.
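
A toy version of that kind of stress test, applying several shocks at once and checking whether diminished-mode operation is still possible, might look like the sketch below. The bandwidth and desktop shocks echo the examples above; the cloud shock, the resource names, and the minimum operating floors are hypothetical.

```python
# Hypothetical stress test in the spirit of post-2008 bank stress tests:
# apply several bad events at once and check diminished-mode operation.
# All resources, shock sizes, and minimums are illustrative assumptions.
from __future__ import annotations


def stress_test(shocks: list[dict[str, float]], minimums: dict[str, float]) -> dict[str, float]:
    """Apply all shocks simultaneously (each maps resource -> surviving fraction)
    and return the resources that fall below the minimum needed to keep operating."""
    remaining = {resource: 1.0 for resource in minimums}
    for shock in shocks:
        for resource, surviving in shock.items():
            remaining[resource] = remaining.get(resource, 1.0) * surviving
    return {r: remaining[r] for r in minimums if remaining[r] < minimums[r]}


scenario = [
    {"bandwidth": 2 / 3},  # a third of all network bandwidth goes away
    {"desktops": 0.5},     # half of all desktops must be reinstalled by hand
    {"cloud": 0.0},        # assume a breached cloud supplier has to be cut off
]
minimums = {"bandwidth": 0.5, "desktops": 0.6, "cloud": 0.25}  # hypothetical floors

print(stress_test(scenario, minimums))  # {'desktops': 0.5, 'cloud': 0.0}
```

In this made-up scenario, bandwidth survives the shock, but desktops and cloud capacity fall below their floors, so the organization could not keep operating even in diminished mode.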

Frankly, if you're going to have auto-update, you need auto-rollback, at the very least. And again, I'm not just randomly focusing on this, but in the case of AI, given its non-deterministic quality, do you need a second AI watching the first? That's the old Latin question of who watches the watchers. On the other hand, that brings up an interesting question: what's the energy budget for watching an AI? If you have one AI watching another, does it take half again as much power, twice as much, 10 times? What are we willing to spend, in terms of just the power budget, for one to supervise another? I say that just because the power budget for AI is a topic of frequent conversation these days.
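
Pairing auto-update with auto-rollback, and staging an update to an internal test bed before the installed base as Geer suggests for some entities, can be sketched as a ring-by-ring rollout. This is a hypothetical illustration: the ring names, version strings, and health check are invented, and real update systems differ.

```python
# Hypothetical sketch of staged update rollout with automatic rollback.
# Ring names, versions, and the health check are illustrative assumptions.
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Ring:
    name: str
    version: str
    previous: list[str] = field(default_factory=list)  # versions to roll back to


def staged_rollout(new_version: str, rings: list[Ring], healthy: Callable[[Ring], bool]) -> bool:
    """Update one ring at a time, starting with the internal test bed.
    If any ring fails its health check, roll back every ring already updated."""
    updated: list[Ring] = []
    for ring in rings:
        ring.previous.append(ring.version)
        ring.version = new_version
        updated.append(ring)
        if not healthy(ring):
            for r in reversed(updated):
                r.version = r.previous.pop()  # automatic rollback
            return False
    return True


rings = [Ring("internal-test-bed", "1.0"), Ring("canary", "1.0"), Ring("installed-base", "1.0")]

# A bad update that only shows up once it reaches the canary ring.
ok = staged_rollout("1.1-bad", rings, lambda r: not (r.name == "canary" and r.version == "1.1-bad"))
print(ok, [r.version for r in rings])  # False ['1.0', '1.0', '1.0']
```

The design choice here is that a failure anywhere undoes the whole rollout, so the installed base never sees a version that misbehaved in an earlier ring.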

Shea: Yeah, because it's super expensive, right? And I think you're honing in on accountability and abuse of power, and on how much money you spend to minimize the wrong outcomes there. That'll be interesting to see.

Geer: I don't want to go down this rabbit hole, but look at the number of initiatives to track something, like tracking a steak in the freezer all the way back to the cow on the farm. There's the idea of known sources, or know-your-customer in the banking world, or any number of others, where we're trying to make a root cause demonstrable in the event of a fast-moving problem, so that we would know how to bound the risk.

Shea: Let's come back to this 47th presidency, what are your recommendations that you would make to the incoming president?

Geer: I think most of the ones I wrote about before still apply, and again, I'll give you that reference, and you're welcome to share it. One of the things it seems to me we want to avoid is... What was that Alan Greenspan remark about improper enthusiasm? Exuberance. Irrational exuberance. That was Greenspan's remark about some financial problem way back when, and I would apply it here. I don't think we want irrational exuberance about cryptocurrency, for example. I'll put that in terms of a non-political risk analysis: all cryptocurrencies depend on a crypto algorithm of some sort. What if the crypto algorithm fails? Then the cryptocurrency collapses at once, much like the demolition of a building with a controlled explosion, where it's always remarkable to me how they make it fall into its own basement. You would have exactly that. So having backstop capital, and so forth, matters. That's an example of something where I know there's a tendency in the next administration to go in the direction of regularizing cryptocurrency, but I would suggest that "irrational exuberance" ought to be a watchword there.

I am not a student of this, Teresa, but the idea of defending forward has simply got to apply here. Because if, per our previous conversation, it is still true that offense has a structural advantage, there's just no argument against defending forward that I'm aware of, none at all. If we could make some progress on the questions of liability, I think that would be good. I have long been astonished, and remain astonished, that it seems as if in every end-user license agreement, the high-order bit on every page is "It's not our fault." That cannot stand; that symptom cannot stand. Sure, it's not slavery, which is where I think that phrase first came up, but it can't stand. That's the level of things I would hope would occupy the strategic thinkers in and around the White House, and not other questions that I fear are easier to solve. Let me give you a quote from, of all people, Calvin Coolidge: "It is much more important to kill bad bills than to pass good ones." That might well be the advice I would give the 47th president.

Shea: Yeah, I don't recall that. That's a great quote. So, as we come to the end here, let's switch gears quickly on a couple of things, Dan. Let's talk about the real-life advice you would give to those entering the cybersecurity field. What would you tell somebody coming into this field today?

Geer: It's complex enough now that serial specialization is your only option. I am a generalist, and I say that with a little bit of pride, but also completely recognizing that that is only possible for someone who's been here quite a while. My father had a friend who swam in the East River in New York every day, and my dad said, "Could I come with you sometime?" And he said, "No, it would kill you. I built up my immunities one at a time; you need them all at once." That was sort of a funny remark, but it's similar here. The degree of specialization that is now required to be part of the good side of cybersecurity is, I think it's fair to say, extreme. So don't start out trying to be a generalist; maybe you can work up to it.

And again, this sounds self-aggrandizing, and I truly don't mean it that way. It's just that I've been here long enough that I could learn things one at a time, as opposed to learning 27 things before breakfast, and it's that load that I would suggest avoiding. Now that leads you to ask, "Well, what specializations should I choose?" and on that, I would probably counsel each individual differently. Do you like solving puzzles? Do you like being at the forefront of action? Do you like the adrenaline rush that comes from being an emergency medical technician, for example? I'd like to know more about the person, because, as with many jobs, you do a better job if you like what you're doing.

In other words, if it's not a job but a calling that you happen to get paid for, that's preferable, so I'd like to know more about the individual. But the core argument is: don't try to be a generalist, but at the same time, try to have more than one specialty, because of the interaction between specialties. In my case, that's electrical engineering and biostatistics. You might say, "What does that have to do with anything?" And the answer is, "Well, there are some characteristics shared between engineering and analysis that have been useful to me." So that's what I would say: two or more specialties, probably close to two, and don't expect any one of them to last forever.

Shea: Yeah, that's great advice. Play to your strengths, keep learning, right, that's great advice. So Dan, I have to ask you about your life on the farm, which I find fascinating. What's your favorite part of the day?

Geer: Collapse at the end. The most satisfying part, actually, is that we do have customers. This is a commercial enterprise. It's small, in the sense that I've never actually wanted to have a million-dollar combine and 4,500 acres of wheat staring me in the face. I've never wanted to go at that level, but when your customers come back to you and want more, I find that very satisfying, and that's way at the end of the line. In general, though, if you mean the actual farming part, there's nothing quite like seeing that germination worked. I put down a large quantity of clover in some pastures, for example. Clover is notoriously slow to germinate. If you stand there holding your breath, you'll just turn blue. But at the end of a season or two, you can go back and say, "It really took, and I don't have to worry about it for another two to five years." That's a great thing. I know that sounds dumb, but seeing that germination worked is extremely satisfying.

I'm a beekeeper, a commercial beekeeper. Smaller scale again, because it's just the family, and there's only so much you can do with the family and a couple of tractors, so what we can do is small. It's not that I object to "Well, the guy down the road from me has 3,000 acres of soybeans." That's perfectly fine; I'm happy for him and his children, and may they please keep doing it. But I've never really wanted to do that. I wanted to be small and diversified, so that's what I do. The beekeeping is an example of the diversification. For those of you who are interested in it, it is harder than ever, but at the same time, and disclaimer again, I don't mean this self-aggrandizingly, if you have any intellectual curiosity about the lower orders, man, this will satisfy all the intellectual curiosity that you have to apply.

Shea: That's awesome. You have a new essay coming out in January or February. Just as a final takeaway here, what can we look forward to in the new year coming from you?

Geer: Some thoughts about how we no longer have source code, but rather, we have source data and what the implications of that are, and what is likely to be the driver for any data you want in that circumstance.

Shea: I'm excited. I cannot thank you enough. Thank you very much. As always, this was so informative and so brilliant, and we appreciate you. Thank you.

Geer: You're entirely welcome.

Read more expert-driven national security insights, perspective and analysis in The Cipher Brief because National Security is Everyone’s Business.

Have a perspective to share based on your experience in the national security field? Send it to Editor@thecipherbrief.com for publication consideration.