The Defense Advanced Research Projects Agency (DARPA) recently hosted the AlphaDogfight Trials, putting artificial intelligence technology from eight different organizations up against human pilots. In the end, the winning AI, made by Heron Systems, faced off against a human F-16 pilot in a simulated dogfight and scored a 5-0 victory.

The simulation was part of an effort to better understand how to integrate AI systems into piloted aircraft, in part to increase the lethality of the Air Force. The event also revived questions about the future of AI in aviation technology and how human pilots will remain relevant in an age of ongoing advancements in drone and artificial intelligence technology.

The Background:

- The U.S. Air Force first fielded drones in combat in the mid-90s in the Balkans

- The first time an armed drone fired a missile in combat was on October 7th, 2001

- Drones are now in use with the Army, Navy, and Marine Corps

- The addition of the Magic Carpet software to the Super Hornet largely automated the process of landing a jet on an aircraft carrier

- Advancements in flight control systems have led to aviation becoming a much safer endeavor and have allowed aerospace engineers more freedom in aircraft design

The Experts:

The Cipher Brief spoke with our expert, General Philip M. Breedlove (Ret.), and Tucker “Cinco” Hamilton to get their take on the trials and the path ahead for AI in aviation.

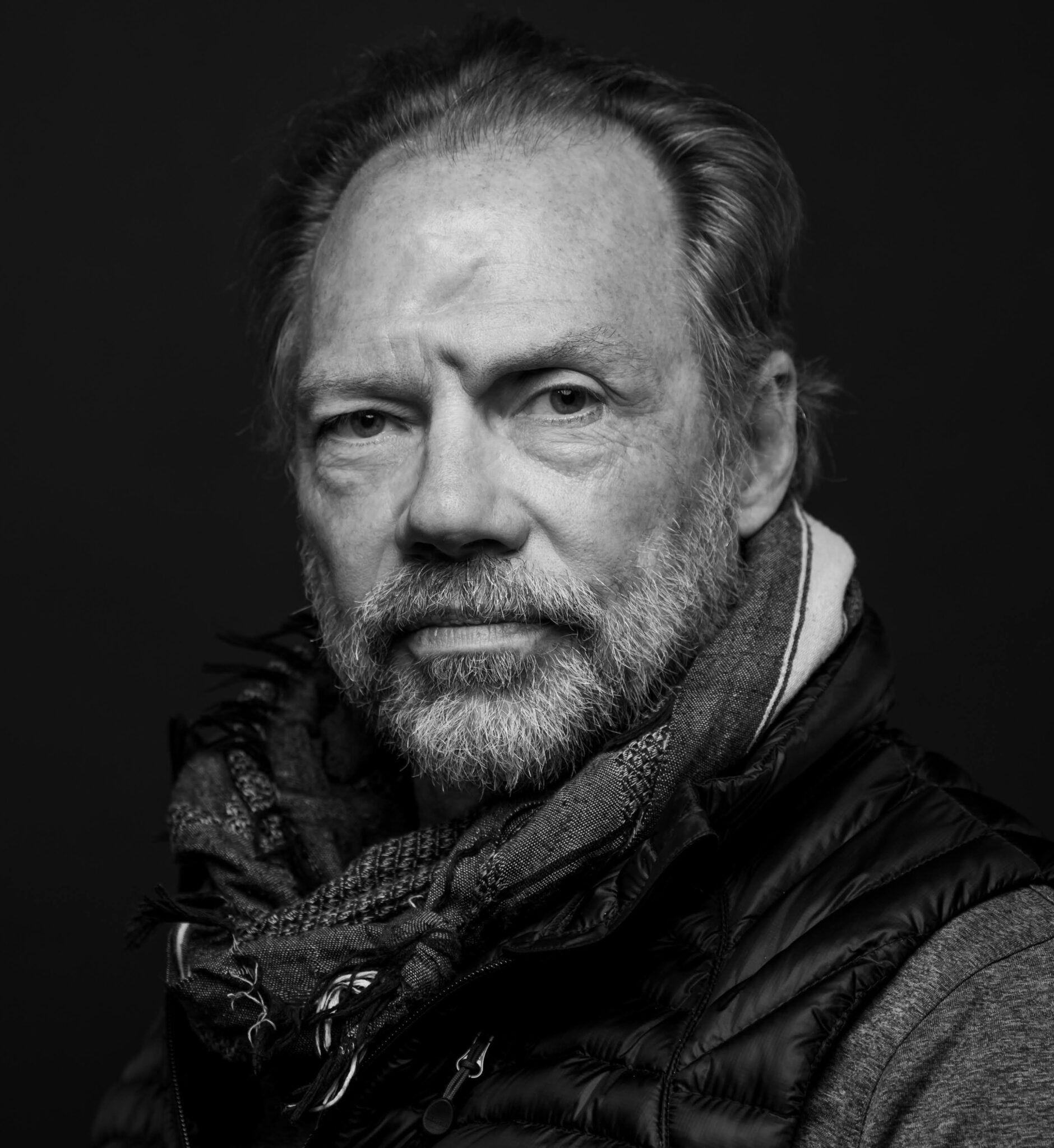

General Philip M. Breedlove, Former Supreme Allied Commander, NATO & Command Pilot

Gen. Breedlove retired as NATO Supreme Allied Commander and is a command pilot with 3,500 flying hours, primarily in the F-16. He flew combat missions in Operation Joint Forge/Joint Guardian. Prior to his position as SACEUR, he served as Commander, U.S. Air Forces in Europe; Commander, U.S. Air Forces Africa; Commander, Air Component Command, Ramstein; and Director, Joint Air Power Competence Centre, Kalkar, Germany.

Lt. Col. Tucker “Cinco” Hamilton, Director, Dept. of the Air Force AI Accelerator at MIT

“Cinco” Hamilton is Director, Department of the Air Force-MIT Accelerator and previously served as Director of the F-35 Integrated Test Force at Edwards AFB, responsible for the developmental flight test of the F-35. He has logged over 2,100 hours as a test pilot in more than 30 types of aircraft.

How significant was this test between AI and human pilots?

Tucker “Cinco” Hamilton: It was significant along the same lines as when DeepMind Technologies' AlphaGo won the game of Go against a grandmaster. It was an important moment that revealed technological capability, but it must be understood in the context of the demonstration. Equally, it did not prove that fighter pilots are no longer needed on the battlefield. What I hope people took away from the demonstration was that AI/ML technology is immensely capable and vitally important to understand and cultivate; that with an ethical and focused developmental approach we can bolster the human-machine interaction.

General Breedlove: Technology is moving fast, but in some cases, policy might not move so fast. For instance, technology exists now to put sensors on these aircraft that are better than the human eye. They can see better. They can see better in bad conditions. And especially when you start to layer a blend of visual, radar, and infrared sensing together, it is my belief that we can actually achieve a more reliable discerning capability than the human eye. I do not believe that our limitations are going to be on the ability of the machine to do what it needs to do. The real limitations are going to be on what we allow it to do in a policy format.

How will fighter pilots of the future think about data and technology in the cockpit?

General Breedlove: Some folks believe that we're never going to move forward with this technology because fighter pilots don't want to give up the control. I think for most young fighter pilots and for most of the really savvy older fighter pilots, that's not true. We want to be effective, efficient, lethal killing machines when our nation needs us to be. If we can get into an engagement where we can use these capabilities to make us more effective and more efficient killing machines, then I think you're going to see people, young people, and even people like me, absolutely embracing it.

Tucker “Cinco” Hamilton: I think the future fighter aircraft will be manned, yet linked into AI/ML powered autonomous systems that bolster the fighter pilot's battlefield safety, awareness, and capability. The future I see is one in which an operator is still fully engaged with battlefield decision making, yet being supported by technology through human-machine teaming.

As we develop and integrate AI/ML capability we must do so ethically. This is an imperative. Our warfighter and our society deserve transparent, ethically curated, and ethically executed algorithms. In addition, data must be transparently and ethically collected and used. Before AI/ML capability fully makes its way into combat applications we need to have established a strong and thoughtful ethical foundation.

Looking Ahead:

General Breedlove: Humans are training machines to do things, and machines are executing what they've been trained to do, as opposed to actually making independent, non-human aided decisions. I do believe we're in a timeframe now where there may be a person in the loop in certain parts of the engagement, but we're probably not very far off from a point in time when the human says, "Yep, that's the target. Hit it." Or the human takes the aircraft to a point where only the bad element is in front of it, and the decision concerning collateral damage has already been made, and then the human turns it completely over. But to the high-end extreme of, "launch an airplane and then see what happens next," kind of scenario, I think we're still a long way away from that. I think there are going to be humans in the engagement loop for a long time.

Tucker “Cinco” Hamilton: Autonomous systems are here to stay, whether helping manage our engine operation or saving us from ground collision with the Automatic Ground Collision Avoidance System. As aircraft software continues to become more agile, these autonomous systems will play a part in currently fielded physical systems. This type of advancement is important and needed. However, AI/ML-powered autonomous systems have limitations, and that's exactly where the operator comes in. We need to focus on creating capability that bolsters our fighter pilots, allowing them to best prosecute the attack, not remove them from the cockpit. Whether that is through keeping them safe, pinpointing and identifying the correct target, helping alert them to incoming threats, or garnering knowledge of the battlefield, it's all about human-machine teaming. That teaming is exactly what the recent DARPA demonstration was about, proving that an AI-powered system can help in situations even as dynamic as dogfighting.

Cipher Brief Intern Ben McNally contributed research for this report.
