SPONSORED — Red teaming is everywhere. The offensive security testing method is mentioned a dozen times in the recent artificial intelligence (AI) executive order released by President Joe Biden and accompanying draft guidance for United States’ federal agencies—and it’s a hot topic for global industry leaders and governments alike.

In the European Union, there’s a call to conduct adversarial testing in the interests of greater transparency and reporting. The Canadian government and Australian Signals Directorate were among the first in the world to table AI guidelines and laws. While a landmark collaboration in November 2023 between the United States Cybersecurity and Infrastructure Security Agency (CISA) and the United Kingdom National Cyber Security Centre (NCSC) released guidelines for secure AI system development aims to address the intersection of AI, cybersecurity and critical infrastructure.

It’s clear that regulators believe efforts to embrace AI could stall if security isn’t properly addressed early on—and for good reason: emulating real-world threat actors, including their tactics and techniques, can identify risks, validate controls and improve the security posture of an AI system or application.

But despite a general consensus that it’s essential to test AI systems, there’s limited clarity around what, how and when such testing should occur. Traditional red teaming—at least in the way the term is understood by security professionals—is not adequate for AI. AI demands a broader set of testing techniques, skill sets and failure considerations.

Looking for a way to get ahead of the week in cyber and tech? Sign up for the Cyber Initiatives Group Sunday newsletter to quickly get up to speed on the biggest cyber and tech headlines and be ready for the week ahead. Sign up today.

Adopt a Holistic Testing Approach

So, what does good look like when it comes to testing the security of generative (Gen) AI systems? Irrespective of the testing methodology and approach used, aligning the testing strategy and scope to a holistic threat model is key. In this way, testers can adopt a threat-informed approach to make sure that Gen AI safety systems and embedded controls, across the entire technology stack, are effective.

Furthermore, testing should include failure considerations across the technology stack, including the specific foundation model, application (user interface or UI), data pipelines, integration points, underlying infrastructure and the orchestration layer. Using this approach, testing for Gen AI not only involves prompt injection and data poisoning attacks but considers the specific implementation context of a given AI system.

Based on our experience, we have identified three primary ways for organizations to consume or implement Gen AI systems and how to test for the security of them:

- Consumers: Gen AI and large language models (LLM) are ready to consume and easy to access. Organizations can consume them through application programming interfaces, known as APIs, and tailor them, to a small degree, for their own use cases through engineering techniques, such as prompt tuning and prefix learning. Typically, the focus of security testing for consumers will be around how the Gen AI technology is being consumed within the boundaries of their network, such as the underlying infrastructure and user interface (e.g., chat bot) and how to avoid exposing data when using these services.

- Customizers: Most organizations identify a need to customize models. They may fine-tune them with their own data or combine them with other techniques, such as prompt engineering or retrieval-augmented generation where there’s no requirement to adjust the underlying model weights. This enables such models to better support specific downstream tasks across the business, limit hallucinations and generate more text-dependent responses. The testing boundaries for customizers will extend beyond the infrastructure or application layers to cover the business specific context, data and integrations.

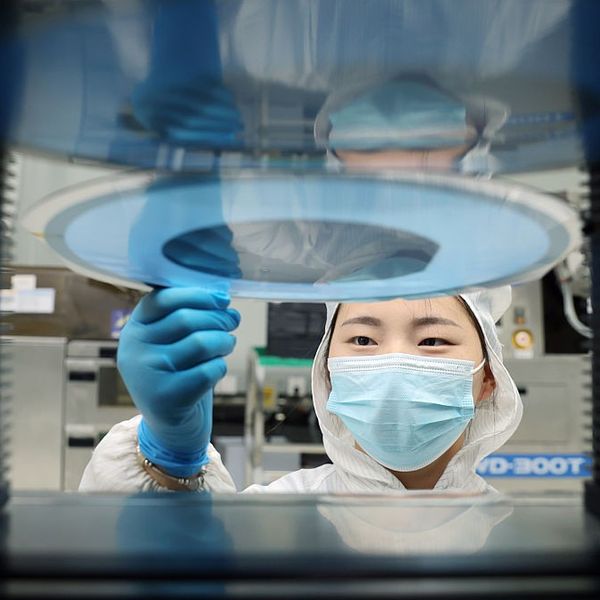

- Creators: A small subset of companies could develop pre-trained models with millions-to-billions of parameters that are core to the widespread enablement and increased adoption of AI models and AI system development. Large commercial or open-source models from the “creators” can be consumed and further customized by other organizations based on their specific business needs and use cases. The testing focus for creators will predominately be on their proprietary foundation models, or LLMs that are trained on broad data sets, as well as other relevant technology or integration points.

Looking To The Future—Security Testing at Scale

Most organizations are familiar with the manual testing approach of red teaming. They may also be applying adversarial testing, which involves systematically probing an AI system to identify points of weakness with the intent of learning how the AI system behaves when provided with malicious, harmful, or benign input.

Less common, however, is the use of continuous testing; after conducting at least one round of manual “point-in-time” testing, adopting a continuous testing approach for Gen AI is needed to monitor for changes in state that could result in the degradation of safety systems or performance over time.

While all of these methods are relevant, testing that takes place on an annual basis at a single point in time is insufficient to cope with the evolving, dynamic nature of Gen AI development pipelines. Instead, we believe organizations need to perform ongoing testing, similar to the security lifecycle approach of DevSecOps, where AI systems are developed and tested in a continuous integrated pipeline; thus, bringing a continuous integration and deployment approach to Gen AI model deployment.

Who’s Reading this? More than 500K of the most influential national security experts in the world. Need full access to what the Experts are reading?

Tap Into Talent

Policymaking is underway, but it takes time and is unlikely to enable organizations to keep pace with the new techniques necessary for security testing. Organizations should find ways that they can be agile enough to adopt and embrace these emerging techniques—resulting in talent demand changes.

There will be a requirement for different types of skills to undertake comprehensive security testing for Gen AI. For example, traditionally, red teaming may involve offensive security expertise and a wide array of skills in various technologies. But Gen AI security teaming requires a data scientist or AI engineer to join them and share the responsibility—someone who understands not only the business use case but also the model, how it works and how that model could be manipulated or bypassed as well as what the outputs will look like when manipulated or bypassed.

Reinventing Security Testing

Realizing the benefits of Gen AI, with trust and transparency, at speed, is not easy. In our experience, having a tactical execution plan and strategic roadmap to kick-start security testing for your Gen AI journey should prioritize the following actions:

- Adopt a holistic approach: Model threats for your Gen AI system to understand the risks throughout the AI development lifecycle and potential threats across the attack surface. Tailor the approach to your own organizational context, but don’t ignore the broader attack surface.

- Define a continuous AI testing program: After conducting an initial round of testing, using a point-in-time manual approach may not be scalable. Adopt a proactive, continuous testing approach to monitor for changes in state that could result in degradation of safety systems or performance over time, resulting in unexpected or undesired output.

- Create a dedicated cross-functional team: Establish a security testing team that accommodates the required specializations of the task in hand. Blend skills to reinvent security teaming and testing practices to manage AI evolution. Instead of simply applying a set of tools to the task, encourage the creativity to think like an adversary through the lens of an AI engineer.

Security testing should never be a one-and-done event. Maintaining a threat-informed approach to validate the efficacy and accuracy of Gen AI safety systems and embedded controls across the technology stack can help to establish a secure digital core that prepares organizations for AI implementation and ongoing innovations.

Addressing failure considerations that extend across the entire attack surface—whether common platforms, models, integration points, data pipelines, orchestration tooling, or underlying infrastructure—is a significant contribution toward the Holy Grail of responsible AI, the security posture of Gen AI systems and applications in an organization.

The Cipher Brief is committed to publishing a range of perspectives on national security issues submitted by deeply experienced national security professionals. Opinions expressed are those of the author and do not represent the views or opinions of The Cipher Brief.

Have a perspective to share based on your experience in the national security field? Send it to Editor@thecipherbrief.com for publication consideration.

Read more expert-driven national security insights, perspective and analysis in The Cipher Brief